Digital trust is no longer a nice-to-have; it’s the new frontline of brand loyalty.

As AI reshapes our digital experiences, it has also surfaced a long-brewing tension: how personal data is collected, used, and profited from. Consumers now ask sharper questions:

What is my data being used for? Who’s benefiting? Can I trust the systems shaping my digital life?

These are the concerns defining how people interact with brands. Consumers have become active participants in the data economy: they know their data has value, and they’re ready to walk away when expectations around privacy and trust aren’t met.

The State of Digital Trust Report 2025 shows just how far this shift extends. Trust has moved from a compliance checkbox to one of the main drivers of brand engagement, loyalty, and differentiation. For marketers, this moment comes as both a challenge and an opportunity.

In this article, we’ll unpack the report’s first chapter, “The Algorithm Effect: How AI Turned Data into a Trust Issue,” and explore why digital trust has become one of the most valuable currencies in marketing.

AI and the rise of data distrust

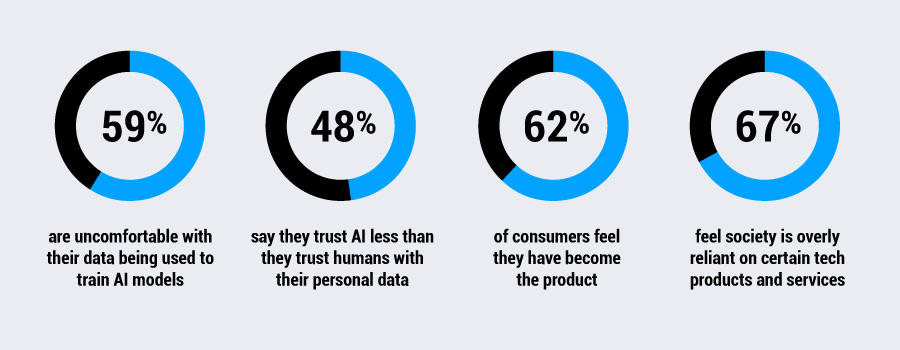

Consumers are now highly aware that their personal data powers many digital experiences, including most of the AI tools that have recently flooded the internet. The report shows that 59 percent are uneasy about their data being used to train AI.

Widespread reports of data being scraped from websites, used without consent, or repurposed for AI applications have fueled anxiety and a demand for greater transparency. Opaque data practices have created a crisis of trust. Users want to know:

- Where is the data coming from?

- Was it collected fairly and ethically?

- Could it expose their private information, or perpetuate bias?

They ask these questions not because they oppose technological progress, but because they feel left in the dark. When companies disclose what data is used to train AI and how, consumers feel respected and empowered.

Consumers increasingly hear about data being fed into black-box models and about lawsuits alleging unauthorized use. The fallout isn’t a loss of interest in innovation; it’s a loss of trust in institutions and brands.

It’s not enough for companies to say they value privacy. Users want to see it in action.

Transparency means users can assess potential privacy risks, understand whether their information is being handled safely, and decide if they want to engage further with the brand.

Trust has never been easier to lose, or more valuable to earn

Data literacy is on the rise, and so are consumer expectations:

- 42 percent of consumers now say they “always” or “often” read cookie banners

- 46 percent say they accept “all cookies” less often than three years ago

These figures signal growing consumer agency over personal data, and they redefine consent as an ongoing dialogue instead of a one-time ask. Brands can no longer count on default acceptance. Users are paying attention, asking sharper questions, and, more importantly, looking for proof of responsible data practices.

With AI-fueled scrutiny and unprecedented visibility into corporate behavior, reputation now moves at the speed of the internet, shaped in real time by consumer sentiment, social media, and shifting public narratives. One misstep, poorly handled privacy incident, or misleading claim can damage years of brand equity.

But for those who rise to the challenge, the rewards are clear.

Meeting that challenge means treating trust not as a message but as a practice: embedding transparency into every user interaction, designing consent flows that respect real choice, and closing the gap between privacy policies and users’ actual digital experience.

In this new landscape, it’s the why and how of your data practices — not just the what — that define market leadership.

Privacy-Led Marketing: trust as a growth strategy

As data literacy grows and AI raises new questions about transparency and ethics, marketers are rethinking how they build lasting relationships. One strategy gaining traction is Privacy-Led Marketing, a trust-centered approach that meets this moment with integrity and impact.

Where traditional marketing often focused on reach and performance, Privacy-Led Marketing prioritizes respect, clarity, and long-term trust. It goes beyond telling consumers your values; it embeds those values into the mechanics of how you collect, use, and communicate about data.

The report shows that:

- 44 percent of consumers say clear communication about data use is the #1 driver of brand trust

While…

- 77 percent don’t understand how their data is collected or used, possibly because of unclear communication from brands combined with low data literacy among users

On the surface, these numbers might seem at odds. But together, they reveal an important insight: people want to understand, and they reward brands that make the effort to help.

This points to a lack of accessible, transparent storytelling around data. By demystifying how data is handled, marketers can close the knowledge gap and earn user trust and loyalty.

Brands that embrace Privacy-Led Marketing build relationships that are not only more resilient, but also future-proof at a time when regulations are catching up to consumer expectations, and AI continues to raise the bar for ethical practices.

Privacy-Led Marketing isn’t about saying “we care about your privacy.” It’s about proving it at every touchpoint.

Explore all insights on The State of Digital Trust in 2025.