“Protect the children!” is one of the few inclusive rallying cries in government and tech. Regulators in the US, Europe, the UK, Australia, and beyond are racing to roll out rules. The intent is good. No one wants digital spaces that are less safe for kids.

But the execution often misses. Policies like social media bans, strict age and functionality restrictions, and ID verification can create new risks, deepen inequities, and strain businesses without delivering the promised safety.

Too often, the people most fluent in how the internet works — trust and safety teams, community managers, youth advocates, even young people themselves — aren’t central to policymaking.

The results: friction for companies, poor outcomes for kids, and headlines that reward tough talk more than effective solutions. For marketers and executives, there’s a second-order problem: broken measurement, higher compliance costs, and brand risk from policies that look protective but don’t work in practice.

So, are these measures actually protecting children, or just creating new risks while adding unnecessary burdens for smaller businesses, stifling innovation, and limiting the effectiveness of digital marketing? What’s the smarter and more sustainable path?

Key takeaways

- Efforts to protect children online center on restricting access to platforms and limiting the collection and use of kids’ data.

- Many lawsuits over unauthorized access and use of children’s data target tech giants and popular media platforms like Google and TikTok.

- ID verification and access bans are often easy to bypass, create privacy risks through the data they require, and harm UX.

- Heavy compliance favors Big Tech, which has the resources to meet requirements and shape policy, while startups struggle to keep up.

- Gen Z and Gen Alpha say bans ignore their lived online reality, in which they often actively shape their own experiences.

- Poorly designed safety initiatives hurt marketing through broken attribution, lost conversions, limited targeting, wasted spend, and low trust.

- Real solutions lie in collaboration among lawmakers, corporations, parents, advocacy groups, educators, and kids, with digital literacy, better design, and stronger standards.

The political and legal landscape around “protecting the children”

Concern about kids online isn’t new. The Children’s Online Privacy Protection Act (COPPA) in the US dates back to 1998 — before modern social platforms existed. What’s changed, particularly in the last five years, is volume and intensity.

Laws and policies now push parental consent requirements, age checks, content and functionality restrictions, and even platform bans. Some target entire categories, like social media. Others focus on specific sectors, like pornography.

Two big forces fuel the urgency:

- Ubiquity of access: Kids have smartphones. Massive game and creator platforms like Minecraft, Roblox, Fortnite, and EA Sports attract millions of minors daily.

- Litigation and headlines: From alleged data misuse to manipulative algorithms to mental health harms, lawsuits keep pressure high and raise expectations that “something must be done.”

The pendulum has swung toward more restrictive approaches. Whether they work as advertised is another story.

A number of these laws have also faced legal challenges, including in Texas, Florida, Tennessee, and California, and that litigation is likely to continue.

Who is responsible for policing kids’ activities online anyway?

We can all agree there are places online that kids shouldn’t go and interactions they shouldn’t have. But the internet doesn’t have a single locked door with a bouncer. Identity and location verification are improving, yet they remain blunt instruments that are often unevenly enforced and easy to work around.

And human nature matters. Tell kids they can’t do something and many will spend more energy finding an access loophole than they would have on the site, game, app, etc. in the first place. The forbidden is always cooler.

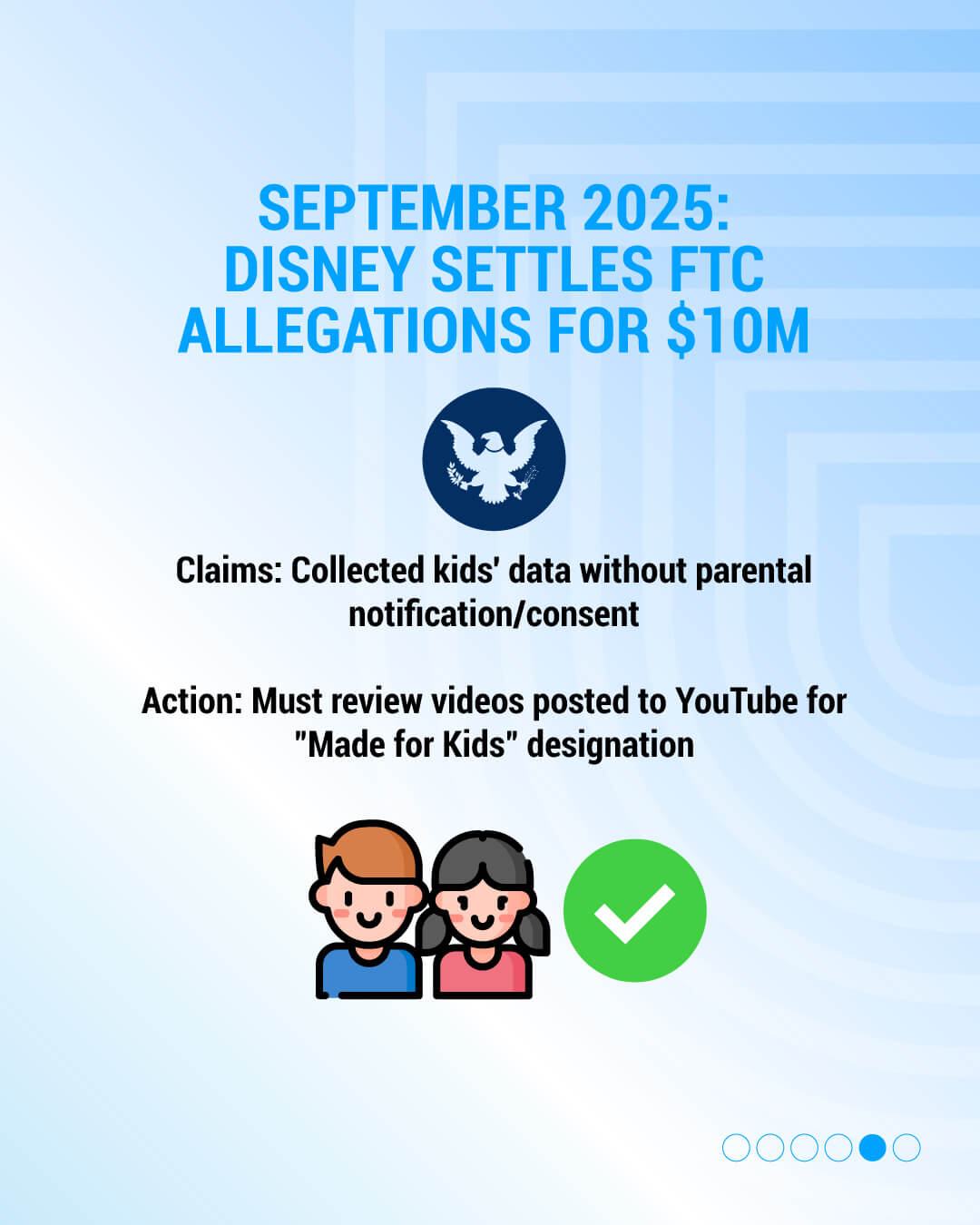

Are lawsuits actually a deterrent?

Big tech platforms are familiar with global penalties for manipulative practices and unlawful data use. Fines can be massive and settlements headline-grabbing. But have they fundamentally improved child protection outcomes?

There’s a strong case that the status quo isn’t delivering. Enforcement is uneven. Litigation and the inevitable appeals take years. Platform incentives are mixed. Real progress requires coordinated effort among parents, kids, regulators, and platforms — plus continued investment in product and operational changes.

That’s a tough alignment to establish, and it often threatens revenue, which dampens corporate enthusiasm, to say the least.

The other side: Why restrictions, bans, and verification requirements are a good thing

Despite the pitfalls, there are clear benefits to gatekeeping when it’s done well and with a 360-degree perspective.

Parents get usable tools: Access and functionality controls and guardrails help manage risk, especially when they can’t monitor every activity or interaction or don’t know a platform well.

Creators and adult-only spaces are safer: Adult communities and businesses don’t want minors present, either. Strong verification protects the businesses, spaces, and members, too.

Brand safety improves: Businesses can avoid adjacency to unsafe content and minimize the chance of unconsented children’s data in their ecosystem.

Regulators can prioritize: Clear, evolving standards reduce whack-a-mole enforcement and help focus limited oversight resources.

Trust becomes a growth lever: Robust protections can be a selling point. Adult users are more likely to subscribe or transact when they trust the audience is truly adult. Same for parents when spaces are kid-only or at least kid-friendly.

In other words, smart restrictions can both improve audience safety (kids included) and support positive brand reputation and trust-building. The question is implementation: Whose responsibility is it, and how can we do it without continuing to leave gaps or creating collateral damage?

Reality check: Why do measures to protect kids online often fail?

As established, there’s no shortage of laws, policies, vigilant parents, and technical implementations. And yet, protecting kids online remains an unsolved problem.

Workarounds are too often easy. Digitally native kids can “borrow” a parent’s ID or sibling’s login, spin up new accounts, go to less policed platforms, or mask locations with tools like VPNs. And many sites, apps, and platforms still lack effective access controls, or have none at all.

Data is valuable, and kids’ data even more so. Targeted advertising to children is restricted or banned in many places, but the behavioral data itself is gold. Kids are a fresh cohort: still forming habits, still developing critical thinking, and highly responsive to social proof.

That’s a tempting audience for engagement-optimized systems. Any parent can tell you that a kid who’s decided they really want something they’ve seen is relentless.

Resource disparity is real. Platforms can outspend parents, advocacy groups, educators, and even many regulators. As data scientist and early Facebook employee Jeff Hammerbacher put it:

“The best minds of my generation are thinking about how to make people click ads. That sucks.”

Verification has always been a blunt instrument. Many systems demand a surprising amount of sensitive personal data to prove age, location, and other restrictive factors.

That creates new privacy and security risks, and the data can become a target for abuse or breaches. Meanwhile, the friction often pushes legitimate users away without necessarily keeping determined kids out.

When restrictions cause harm

While policies, verification requirements, bans, and other initiatives can be well-meaning, there’s often a “bottom trawling” effect: they sweep up far more than their intended target. These efforts often not only fail to protect kids but can also actively harm them, along with marginalized groups.

Strict ID rules often hit people who lack easy access to “Real ID”, including those with name or gender changes, immigrants, people without stable housing, and domestic violence survivors who must keep personal information tightly controlled. LGBTQ+ teens (and adults) can lose critical community and support when access is gated poorly.

Age and identity checks can expand the data that companies collect. Even if the goal is safety, companies can end up with a trove of sensitive information linked to real identities, potentially from minors, along with new incentives to reuse that data for advertising or growth.

Authoritarian regimes have also used ID-linked systems to track, intimidate, or detain people. When everything ties back to a legal identity, anonymity, and with it safety, becomes harder for ordinary users, who lack resources to match what a regime can bring to bear against them.

Additionally, if a platform becomes too hard or unpleasant to use, people leave. For marketers, that means carefully built tracking and ecosystems fail, resulting in lost reach, broken attribution, lower data quality, and fewer conversion opportunities. The brand hit can be immediate, and rebuilding trust takes far longer.

What do “the kids” say?

For Gen Z and Gen Alpha, the internet isn’t a novelty. It’s the water they swim in. Many have never known a world without broadband. Plenty see heavy-handed restrictions as paternalistic and out of touch, especially when drafted by people who don’t use the same platforms or understand the culture.

They don’t see themselves only as consumers or victims; they’re creators, community builders, and often savvy businesspeople. Many already shape their feeds, limit usage, or avoid certain apps.

When policy ignores their lived experience, it misses effective levers like literacy, design choices, and social norms, areas where kids themselves can be powerful partners (if taken seriously).

Who can do a better job?

Top-down rules will always be part of the picture, whether regulations or platform policies. But thriving online communities show that strong, consistent moderation and community norms can outperform one-size-fits-all mandates.

Healthy spaces do active, everyday work: creating and enforcing clear rules, providing fast and solid responses to abuse, enabling meaningful appeals, and providing user tools that actually work.

Those practices are inarguably hard to scale, but when they’re present and prominent, communities self-regulate more effectively than any dry regulation or jargon-laden policy can.

The tightrope that businesses and marketers have to walk

For brands and growth teams, restrictive rules have real costs:

- Shrinking addressable audiences and lower engagement when friction rises

- Broken attribution and distorted measurement when age gates and consent flows are clunky or widely bypassed

- Less and lower-quality data, plus harder segmentation, which raises CPA and depresses ROI

- Greater legal and PR exposure if minors slip through or if data collection outpaces consent

That said, if kids aren’t your audience, excluding them is absolutely desirable: cleaner data, lower legal risk, fewer brand safety surprises, and less wasted ad spend. Plus, advertisers, payment processors, and distribution partners increasingly demand proof of audience eligibility (and consent). Clear, effective gating can open doors to premium partnerships and other growth opportunities.

And remember: when headlines hit, the splashiest narrative wins. “Company endangered kids” travels farther and faster and fuels more outrage than “Company built a careful, compliant customer experience.” It’s on leaders to build both guardrails and a safety narrative that holds up with real, live humans as well as under scrutiny.

The costs of compliance and change and who bears them

Implementing controls and redesigns takes time, resources, and money. Smaller organizations often have little of any of these, and minimal access to lawyers or lobbyists who can shape rules, navigate enforcement, or fight allegations.

Large tech platforms have them in abundance. They can fund multi-year legal fights, geoblock jurisdictions, or set de facto global standards through product choices. Is it fair to expect them to carry more of the load? Given the scale of their gains, yes.

But this creates a familiar dynamic: compliance favors incumbents. Big platforms already have the audience and engineering muscle to implement controls quickly and thoroughly — if they choose. If a few users churn, the business barely notices. Meanwhile, new entrants struggle to launch, let alone to build safer, better products.

Consumers also say they value strong safeguards, but many won’t tolerate extra steps that delay gratification or limit functionality. The gap between stated preferences and behavior too often stifles innovation and entrenches the status quo — to the potential detriment of all audiences, kids included.

When the dust settles, the combination of heavy rules and sticky habits can lock in the very systems we want to reform, leaving individuals — including kids — as the product that funds big companies’ war chests and ever more addictive online experiences.

So how do we actually protect kids online?

A better path blends smarter policy, better product design, and shared accountability — without sacrificing privacy, innovation, or business fundamentals.

Shift the framing from surveillance and blunt bans to digital literacy for everyone, from regulators to kindergarteners. Teach how algorithms shape feeds, where security gaps tend to be and how to patch them, how to spot manipulative design and identify corporate agendas, and how to set personal boundaries online.

Bring young people to the table. To borrow from the disability rights movement: “Nothing about us without us.” Include Gen Z and Gen Alpha voices in drafting and testing policy and mechanisms, alongside trust and safety pros, community managers, parents, and educators.

Invest in privacy by design that favors age-appropriate design and proportionate ID verification over broad and sensitive ID collection. Use privacy-preserving signals and device-level checks that minimize data demands. Make privacy-centered defaults the norm for minors and communicate about them clearly to parents.
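
One way to picture proportionate verification is data minimization: check the age once, keep only the yes-or-no answer, and discard the birthdate. The Python sketch below is illustrative only. The names `AgeAssurance`, `age_on`, and `assure_age` are hypothetical, and it assumes a birthdate has already come from some trusted check, which is the hard part this example doesn’t cover.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AgeAssurance:
    """The only record kept after a check (hypothetical shape)."""
    meets_minimum_age: bool
    checked_on: date


def age_on(birthdate: date, today: date) -> int:
    """Whole years between birthdate and today."""
    years = today.year - birthdate.year
    # Subtract a year if this year's birthday hasn't happened yet.
    if (today.month, today.day) < (birthdate.month, birthdate.day):
        years -= 1
    return years


def assure_age(birthdate: date, minimum_age: int, today: date) -> AgeAssurance:
    """Return a yes/no result; the birthdate itself is never stored."""
    return AgeAssurance(
        meets_minimum_age=age_on(birthdate, today) >= minimum_age,
        checked_on=today,
    )


# Example: a user born 15 June 2010, checked against a minimum age of 16.
result = assure_age(date(2010, 6, 15), 16, date(2026, 6, 14))
print(result.meets_minimum_age)
```

A downstream system that only ever sees `meets_minimum_age` has nothing sensitive to leak or repurpose for advertising.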

Build real community for the long term by funding moderation and innovation at the same level as revenue growth. Provide clear rules, consistent enforcement, fast reporting tools, and transparent appeals. These can protect kids more reliably than endless policy scrolling or rickety gatekeeping.

Align the business by training marketing and growth teams on Privacy-Led Marketing, including consent-first capture, durable zero- and first-party data, contextual targeting, incrementality testing, and other practices that reduce dependence on invasive tracking (including of children). Design funnels that work within guardrails rather than trying to route around them.

Measure what matters and don’t mistake checkbox controls for real safety. Track leading indicators: abuse response times, minor account leakage rate, policy evasion attempts, community guideline adherence, and user trust scores. Report them like you report DAU/MAU and revenue.
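
As a rough illustration, two of those indicators could be computed from moderation and audit records like this. It’s a minimal Python sketch under stated assumptions: the record types `AbuseReport` and `AccountAudit`, their fields, and the definition of leakage (the share of audited accounts in an adult-only space that an audit flags as likely minors) are all hypothetical, not an industry standard.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class AbuseReport:
    # Hours from a user's report to the first moderator action (assumed field).
    hours_to_response: float


@dataclass
class AccountAudit:
    # Audit concluded the account likely belongs to a minor (assumed field).
    flagged_as_minor: bool
    # Account had access to an adult-only space (assumed field).
    on_adult_only_surface: bool


def median_response_hours(reports: list[AbuseReport]) -> float:
    """Median time to first response; less skewed by outliers than a mean."""
    return median(r.hours_to_response for r in reports)


def minor_leakage_rate(audits: list[AccountAudit]) -> float:
    """Share of audited adult-only accounts flagged as likely minors."""
    gated = [a for a in audits if a.on_adult_only_surface]
    if not gated:
        return 0.0
    return sum(a.flagged_as_minor for a in gated) / len(gated)


reports = [AbuseReport(1.0), AbuseReport(3.0), AbuseReport(8.0)]
audits = [
    AccountAudit(flagged_as_minor=True, on_adult_only_surface=True),
    AccountAudit(flagged_as_minor=False, on_adult_only_surface=True),
    AccountAudit(flagged_as_minor=False, on_adult_only_surface=True),
    AccountAudit(flagged_as_minor=False, on_adult_only_surface=True),
    AccountAudit(flagged_as_minor=True, on_adult_only_surface=False),
]
print(median_response_hours(reports), minor_leakage_rate(audits))
```

The point is less the arithmetic than the habit: once these numbers exist, they can sit on the same dashboard as DAU/MAU and revenue.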

Coordinate and don’t duplicate. Standards bodies, industry groups, and regulators should publish interoperable guidance so companies aren’t rebuilding age checks or safety tooling from scratch in every market or every time technology changes. Smaller firms need off-the-shelf options that meet requirements without breaking the bank.

Protecting kids online is important and can’t be left to what too often amounts to theater in practice. Platform bans and ID checks may satisfy headlines at first glance, but they won’t carry the day alone, and they can introduce new harms.

Winning strategies blend smarter design, privacy-preserving assurance, strong community practice, and meaningful youth input.

There must be real consequences for companies (and regulators) that don’t take safety, security, and compliance seriously. It’s never been easy and still isn’t, but when it’s done well, companies can protect kids online, strengthen business operations and legal compliance, build trust with all audiences, and bolster brands.