Every click, scroll and swipe is a small act of digital trust. But that trust is too often manipulated by elements like pre-ticked consent boxes, misleading buttons, and endless loops that make opting out much harder than buying in.

These practices are known as dark patterns, and they’re the equivalent of digital deception. They chip away at user trust one design choice at a time.

Marie Potel-Saville knows a better way: using AI to detect and redesign dark patterns at scale. She’s the founder of Fair Patterns, an AI-powered tool that helps companies spot and fix manipulative patterns in their online channels.

Here’s how it works: Using machine learning trained on thousands of interface examples, the platform scans websites and apps to flag elements that could mislead users. It then suggests compliant, fair design alternatives based on UX and legal standards, helping teams redesign for clarity and consent.

The results Fair Patterns is seeing not only give users more agency, but also translate into measurable loyalty and business results for brands.

In this conversation, Marie explains why the future of growth depends on transparency, and shares practical steps brands can take to build digital experiences rooted in meaningful consent.

__________

Fair patterns offer a growth opportunity

Brunni: The term ‘dark patterns’ is now widely recognized, but you speak about ‘fair patterns’ instead. Can you explain what that means and why it matters to flip the narrative?

Marie: Fair Patterns is a concept we created over three years of research and development as a countermeasure to dark patterns. The idea is to create interfaces that empower users to make their own free and informed choices. It’s inspired by the UK’s Competition and Markets Authority’s proposed principle of digital fairness, and grounded in the idea that humans should regain their agency online.

Each of us should be able to make decisions online based on our own preferences without undue interference. We’re not in favor of nudging users towards privacy or consumer protective measures (apart from vulnerable users like children), for two main reasons:

- Nudging is paternalistic.

Who decides what’s good for us? This question is already difficult in democracies, but even more so under the current threats to the rule of law and democracy in the world.

- People don’t learn anything when they are being nudged.

There is a huge gap in knowledge and understanding of the digital economy, of the attention economy that’s behind it, of targeted advertising, and so on. It’s critical to close this gap, even more so as humans increasingly interact with AI.

Brunni: Some leaders still see ethical design as a compliance cost. How do you help them see it as a growth opportunity instead?

Marie: That’s a classic! I heard this at the beginning of my career as a competition law attorney. The reality is that trust and transparency actually drive at least 10 percent more growth annually than tricks and traps, according to a McKinsey study.

McKinsey also found that 46 percent of consumers consider switching to another brand when a company’s data practices are unclear.

We’re also seeing it in our own data. We’ve found that fair patterns are more profitable than dark patterns after only six months. The reason is simple: Dark patterns like subscription traps create a mechanical boost in turnover, but it’s very short-term. As soon as people realize they’ve been tricked, they’re furious and they walk away.

For example, one study showed that users exposed to dark patterns express 56 percent less brand trust, and another demonstrated that consumers blame companies for employing dark patterns.

The U.S. Federal Trade Commission (FTC), which looks out for unfair business practices, recently secured a USD 2.5 billion settlement with Amazon for using dark patterns in subscriptions. This shows how noncompliance costs are simply skyrocketing.

What’s also interesting is that USD 1.5 billion of that settlement will be reimbursed to customers who were tricked. This is a very strong signal that the era of getting away with deceiving customers is over.

How to implement ethical design, every day

Brunni: A big part of your work is about designing for consent and transparency, and fighting what you describe as online mass deception. What does it take for companies to design experiences that truly respect user choice instead?

Marie: It’s simpler than people think. Ethical design is basically “good UX design,” and it means following key principles like guidance, easy navigation, and control for errors.

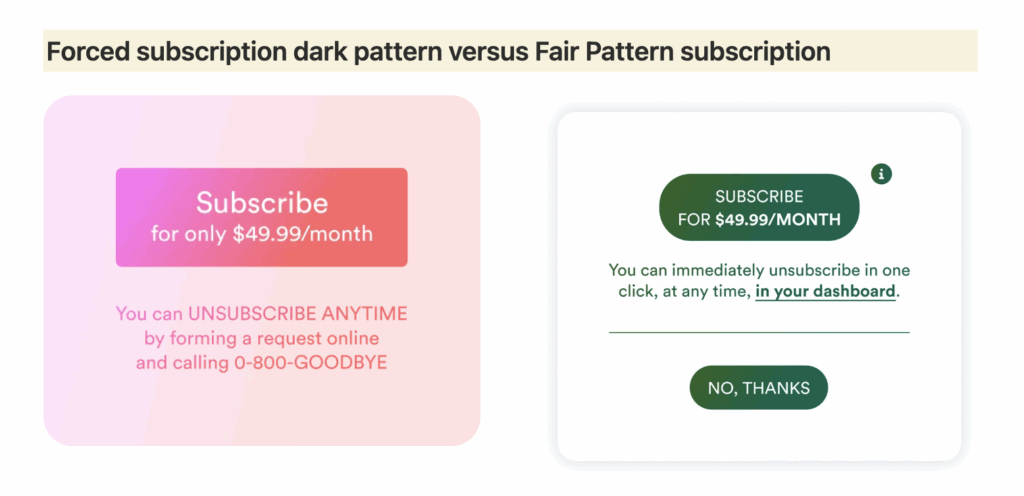

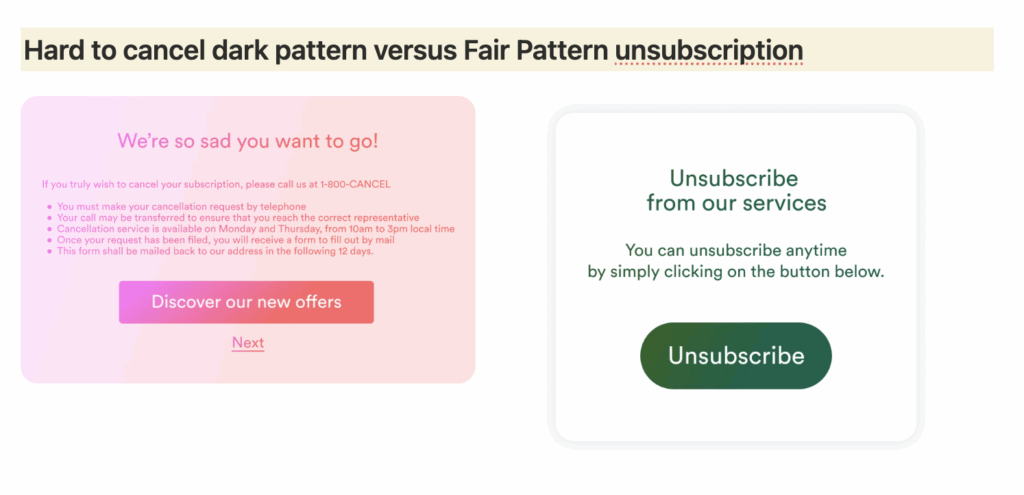

When we created our library of Fair Patterns back in 2023, we established a list of criteria. For example:

- Provide clear buttons to accept or reject an offer.

- Fight the salience bias: Present equally visible buttons, in the same color, to accept or reject cookies or an offer.

- Use plain language: Follow the ISO plain language standard of 2023 (ISO 24495-1), and its application to legal communication in 2025.

- Explain the consequences of choices: Do so in simple terms, so users can make meaningful choices.

- Fight consent fatigue: Give users meaningful and straightforward controls, such as privacy dashboards that take only a few seconds to set up and update.
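As a rough illustration, a team could turn a few of these criteria into automated checks on a consent banner. The Python sketch below is a minimal, hypothetical example and not Fair Patterns’ own tooling, which the article describes as based on machine learning. The `Button` and `ConsentBanner` types, their fields, and the `audit_banner` function are assumptions invented for this example, and comparing button area is only one simplified proxy for “equally visible.”

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Button:
    # Hypothetical description of a rendered button.
    label: str
    color: str       # e.g. a hex string such as "#333333"
    width_px: int
    height_px: int


@dataclass
class ConsentBanner:
    # Hypothetical description of a cookie or offer banner.
    accept: Button
    reject: Optional[Button]   # None means no reject button is offered
    preticked_boxes: int       # optional checkboxes ticked by default


def audit_banner(banner: ConsentBanner) -> List[str]:
    """Return plain-language findings; an empty list means no issue was found."""
    findings: List[str] = []

    # Criterion: provide clear buttons to accept or reject.
    if banner.reject is None:
        findings.append("no clear button to reject")
    else:
        # Criterion: equally visible buttons, in the same color.
        if banner.accept.color.lower() != banner.reject.color.lower():
            findings.append("accept and reject buttons use different colors")
        accept_area = banner.accept.width_px * banner.accept.height_px
        reject_area = banner.reject.width_px * banner.reject.height_px
        if accept_area != reject_area:
            findings.append("accept and reject buttons differ in size")

    # Pre-ticked consent boxes are a commonly cited dark pattern.
    if banner.preticked_boxes > 0:
        findings.append("optional checkboxes are pre-ticked")

    return findings
```

A banner with two same-sized, same-colored buttons and no pre-ticked boxes returns an empty list, while a banner with no reject button and pre-ticked boxes returns two findings. Criteria such as plain language or explaining consequences are much harder to reduce to simple rules and would still need human review and user testing.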

In the example with Amazon, the FTC not only ordered the company to stop using dark patterns in Prime, but also to:

- Provide clear buttons to reject Prime

- Clearly explain that Prime is a subscription, including what the annual fee is and how it renews

- Create an easy way for customers to unsubscribe

This is basically the design we’ve been advocating for years!


The future of fair patterns

Brunni: Fair patterns is part of a larger conversation about the future of tech governance and digital dignity. What role do you see design playing in systemic change?

Marie: Indeed, there is a much bigger conversation about digital dignity, which clearly implies human agency.

With the rise of AI agents that can do pretty much anything, including navigating the web to book tickets or do grocery shopping, two dimensions of design are more important than ever:

- Privacy UX design, which empowers users to fully understand the privacy implications of these agents. User-conducted research and testing of these agents’ privacy controls is important because regulators increasingly require user testing results to judge “clarity.”

- Fairness by design. Currently, most LLMs use dark patterns: they embed sycophancy (flattering the user), anthropomorphism (mimicking human traits or emotions), and brand bias (elevating the chatbot’s own company or product). So it’s no longer about “just” ensuring that the UX design is fair, but also that the LLMs used to create these designs, navigate, or do anything else for us are free from manipulation and deception. That’s the next frontier we’re working on.

Brunni: If we look 5–10 years ahead, what would success look like for you? What does a world built on fair patterns instead of dark ones actually feel like for users?

Marie: Love this question! Success for us means that all humans regain their ability to make free and informed choices online and in any human-computer interaction.

In other words, it’s not just the ability to avoid being tricked by cookie banners into being tracked, or to avoid accidentally subscribing to something, but also to maintain or regain the ability to think critically when interacting with AI.

We humans have always had the ability to solve complex problems by ourselves, so let’s not delegate this precious skill.

________

Marie Potel-Saville is an impact entrepreneur, the founder & CEO of Fair Patterns, the AI solution that finds and fixes dark patterns at scale, and Amurabi. She is also the Paris Chair of Women in AI Governance, and an expert in dark patterns with the European Data Protection Board. She’s a former lawyer and GC, and teaches at Singapore Management University, Sciences Po, and Paris II university. Marie is also an advisory board member at the BASIL research initiative, the Serpentine Galleries, and Pickering Pierce.