App stores are packed with free-to-play games, but “free” is never the whole truth.

Behind the vibrant characters, power-ups, and competitive leaderboards lies a sophisticated business model. Players might not hand over their credit cards, but they’re definitely still paying. The currency? Their data.

Every tap, pause, or abandoned purchase becomes valuable information that feeds targeted advertising, monetization strategies, and future game development. This data-for-entertainment exchange has become the foundation of mobile gaming economics, but it comes with risks that extend far beyond privacy policies.

So the question isn’t really whether players are trading their data for “free” games anymore. They are. What matters now is whether developers can be honest about that trade-off while still building something that benefits everyone involved.

The economics of “free” games

In mobile gaming, “free” is the hook that attracts players. But behind every free download is a business model designed to make money elsewhere. Developers compete for attention with thousands of other titles, and they still need revenue to stay in business.

Most games fall into one of three categories:

- Freemium: free to download, with in-app purchases that speed progress or unlock extras

- Ad-supported: funded by ads, often targeted using player behavior or demographics

- Hybrid: a mix of in-app purchases and data-driven ads

These models are necessary to keep free games alive.

After launch, developers still need steady revenue to handle updates, maintain servers, and fund marketing efforts. With thousands of new games releasing every month, a solid monetization strategy is what determines whether a game survives or gets lost in the crowd.

Data has become the fuel powering this entire system. Studios track engagement patterns, session lengths, and spending triggers to optimize player experience and identify monetization opportunities. Advertisers pay premium rates when they can reach precisely targeted audiences at optimal moments.

The mechanics work when balanced properly: players receive ongoing entertainment and content updates, while developers secure resources to maintain and improve their games. But when that balance tips — when players feel manipulated or their privacy gets compromised — the costs compound quickly through negative reviews, uninstalls, and lost revenue.

The ideal scenario is simple: players enjoy the game, and developers use data responsibly, keeping players informed from the start.

What data are players actually giving up?

When players install a game and tap “accept” on the terms and conditions, they often don’t realize how much information is exchanged in the background. A free game may never ask for a credit card, but it is still gathering personal information and using it to shape both how the game works and how it makes money.

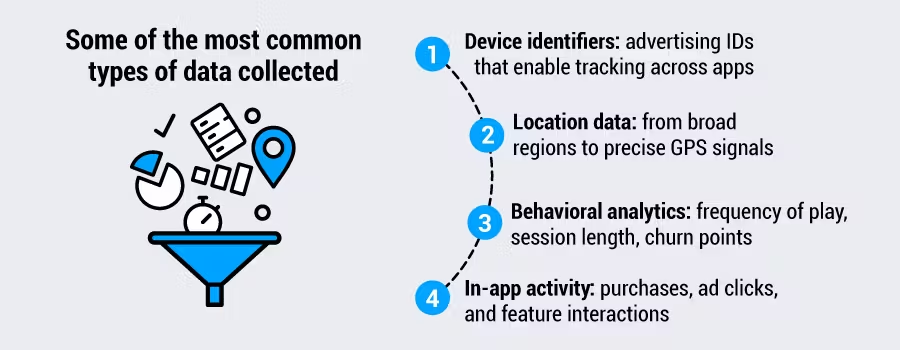

Some of the most common types of data collected include:

- Device identifiers: advertising IDs that enable tracking across apps

- Location data: from broad regions to precise GPS signals

- Behavioral analytics: frequency of play, session length, churn points

- In-app activity: purchases, ad clicks, and feature interactions

Beyond these basics lies an even more valuable layer: inferred data. This is information that isn’t collected directly but is pieced together from how players behave, such as predictions about who’s likely to make a purchase or which players are about to quit.

Developers and advertisers look for patterns that reveal intent or predict behavior. If a player logs in every morning before work, the game can serve ads that align with that routine. If someone regularly quits the game after level 20, designers know where to adjust difficulty or add incentives.

In other words, free games aren’t just observing what players do — they’re learning why they do it. That information gets turned into detailed customer profiles that are worth a lot of money to advertisers.

A recent study by Kröger, Raschke, Percy Campbell, and Ullrich, titled “Surveilling the gamers: Privacy impacts of the video game industry,” explored how modern games collect and analyze player behavior in ways comparable to social media platforms. Scholars warn that the industry’s ability to profile players with such precision should be treated with the same level of scrutiny applied to other digital ecosystems. Such profiles are powerful, but also highly sensitive.

When data is collected without clear consent or is hidden behind vague disclosures, players feel misled. Once people sense their personal lives are being sold, the relationship with the game breaks down.

According to “The State of Digital Trust in 2025,” privacy and security are now central to trust. Players, like any digital consumer, are clear about what they expect in return for sharing their data. Trust is the baseline for earning attention, engagement, and long-term loyalty.

For many game studios, the challenge is balancing business needs with player expectations. Data can help refine games, improve user experience, and fund development, but only if it’s gathered with transparency. Otherwise, what started as “free” entertainment can end up costing developers their reputation and their revenue.

The real cost for developers

On paper, collecting as much data as possible looks like a win. More data should mean sharper targeting and stronger monetization. In reality, relying on data collected without genuine consent often backfires. Dark patterns might push short-term opt-in rates higher, but the gain rarely lasts. Examples include burying consent options, making “accept all” the easiest choice, and overloading players with pop-ups.

As players become more privacy-aware, they notice manipulative design and respond by deleting apps, leaving negative reviews, or simply refusing to opt in.

Regulatory and platform risks

It’s not just about how players feel. Privacy laws around the world are raising the bar for how data can be used, and the consequences of getting it wrong are serious. At the same time, Apple and Google have introduced stricter rules on transparency, consent, and tracking, making privacy compliance a condition for staying in their stores.

If developers fall short, they risk losing access to ad networks. They may even see their games pulled from app stores. These hits can wipe out visibility, distribution, and revenue overnight.

The performance paradox

There’s also a trap when it comes to marketing performance. Collecting more data doesn’t automatically improve campaigns or monetization. In fact, low-quality data gathered without real consent often skews attribution and makes ad spend less effective. In contrast, when players choose to share their data, the insights are more accurate and actionable. That means smarter targeting, clearer attribution, and ultimately a stronger return on ad spend (ROAS).

Why better consent pays off

The cost of “free” games doesn’t just fall to players. Developers also pay the price if privacy is treated as a checkbox instead of a strategy. By building transparency into consent, studios flip the equation: less churn, better data, and stronger campaigns.

In a crowded market, building relationships based on trust can be the edge that turns a short-lived hit into a lasting business.

The player perspective: trust and control

As privacy awareness grows, players want to understand why certain permissions matter and whether their choices are respected.

The key differentiator isn’t whether data is collected, but whether players feel informed and empowered in the exchange. Players often share data willingly when the value proposition is clearly explained and they stay in control of their choices. What they resent is feeling tricked into giving it up. Being upfront about how data supports gameplay or keeps the game free creates a sense of partnership rather than exploitation.

Privacy, then, becomes part of the game itself. Just as graphics and design shape how a game feels, so does the way consent is requested. Studios that handle this thoughtfully don’t just comply with the rules; they build loyalty.

Privacy as a business advantage

For years, many developers treated privacy like a checkbox: something you had to do, but never the part of the job you looked forward to. That view is changing. Privacy is no longer just about avoiding fines or meeting advertising platform rules. It’s becoming a way to build stronger, more sustainable businesses.

When players trust you, they stick around longer, spend more, and tell their friends. In a crowded market in which hundreds of new games launch every week, loyalty is one of the most valuable currencies a developer can have.

There’s a business upside, too. Data that players choose to share is more reliable, and therefore more useful. Marketing campaigns perform better, attribution is cleaner, and investors can see that a studio is building for the long term rather than chasing quick wins.

The future of free-to-play doesn’t mean abandoning data. It means changing the way it’s collected and explained. The developers who succeed will be the ones who treat players like partners, not products, and know that growth and privacy can work hand in hand.