When OpenAI teased Sora, its latest text-to-video model, the internet was flooded with videos so realistic that even digital natives struggled to tell them apart from video recorded by humans behind a camera.

Sora’s public launch in December 2024 displayed a leap in quality that was a stark contrast to the grainy, glitchy AI videos from just two years prior. What once required professional equipment and big budgets could now be generated by anyone at home with just a text prompt.

Today, with approximately 34 million new AI images generated daily and more than 15 billion since 2022, the boundary between real and artificial is becoming ever harder to discern. Alongside this change, the question increasingly shifts from “was this made by AI?” to “can I trust the source that shared it?”

Seventy-eight percent of Americans now admit it’s nearly impossible to separate real from machine-generated content online, and three-quarters say they “trust the internet less than ever.” As generative AI becomes increasingly embedded in everything from product reviews to news articles, trust has shifted from being a competitive advantage to a non-negotiable foundation for brands and marketers.

Digital life at an inflection point

The appetite for authenticity has never been greater. Getty Images’ global “Building Trust in the Age of AI” study found that 98 percent of consumers see authentic visuals as critical for trust, 87 percent actively look for them, and nearly 90 percent want brands to disclose when an image is AI-generated.

Yet, only one in four people say they understand how AI is used, with 31 percent of consumers saying they would abandon a product entirely if they couldn’t switch off its AI features.

This paradox, where technology enables unprecedented creativity and scale but simultaneously erodes consumer trust, presents marketers with a new reality. The same tools that allow for hyper-personalization and efficiency also risk undermining the very relationships brands are built on.

From “is this AI?” to “do I trust the source?”

As generative AI reaches near-perfect outputs, users have started to understand that trying to determine whether content is AI-generated is beside the point. Instead, they’re turning to relationships, asking whether they can trust the brand or individual behind the content.

“Simply put, people’s decisions whether to trust an image will be based on their perception of the person or organization that shared it,” writes futurist Henry Coutinho-Mason on The Future Normal. “As AI-generated images get completely indistinguishable from ‘real’ ones, creators will have to be transparent in order to keep their audience’s trust.”

Increasingly, one of the filters for engagement and data-sharing is trust in the source sharing the content, not the content’s origin. Research has found that 62 percent of consumers would place more trust in brands that are transparent about AI usage.

For marketers, this means shifting from being ambiguous about AI to taking a firm stance on using it to build unshakeable trust.

Between the writing and the publishing of this article, Google launched its new text-to-video generator, Veo3. It represents a significant improvement in output quality compared to other AI video generators from just a couple of months prior.

Case in point: Afterlife: The Unseen Lives of AI Actors Between Prompts, a short film created by Hashem Al-Ghaili and generated entirely by the new model. It imagines what might happen to AI in the liminal space after humans are done prompting.

Marketers as architects of trust

In this new landscape, marketers are caught in the center of a trust crisis they didn’t create but can help to solve.

Often responsible for decisions that influence brand communication and customer relationships, marketers can act as the architects of trust, crafting the narratives, choosing the right channels, and setting the tone for how brands engage with their audiences in a way that supports transparent and ethical practices.

In a context where AI can replicate products, services, and even customer experiences with increasing sophistication, the human relationship becomes the one thing that can’t be easily copied.

Brands that proactively address AI concerns and commit to transparent practices can capture market share from competitors who choose to stay ambiguous or defensive about their AI use.

The cost of inaction is steep, and it is rising as quickly as AI technology is being developed. Brands that fail to establish clear guidelines for AI use and communication strategies risk reputational damage. A single viral incident of undisclosed AI use, biased algorithmic decisions, or data mishandling can destroy trust that took years to build. Moreover, brands that choose to stay silent about AI are allowing competitors to define the conversation, and potentially industry standards, without them.

The digital literacy gap: challenge and opportunity

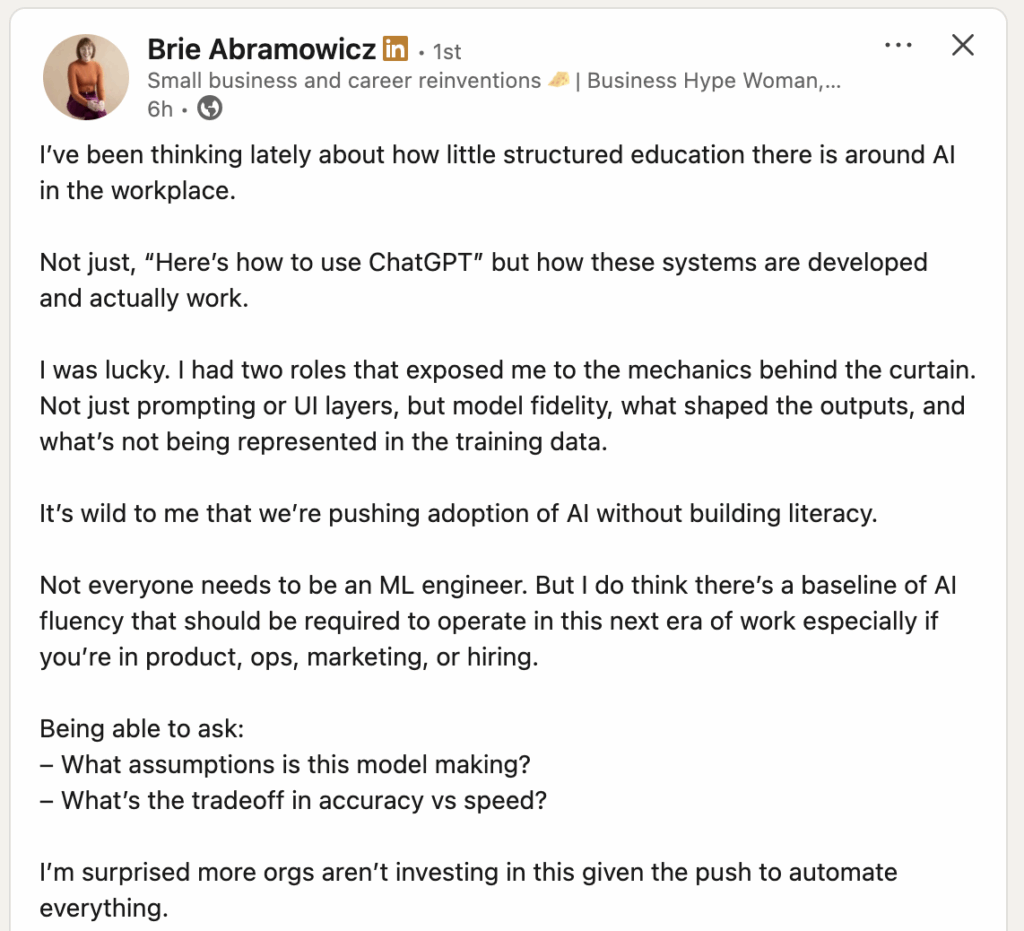

Despite the proliferation of AI tools, there remains a significant gap between adoption and understanding. According to the Marketing AI Institute, 67 percent of marketers cite lack of training as their top barrier to AI adoption, and 47 percent of organizations offer no AI-focused education for their teams.

On the consumer side, Pew Research shows that 53 percent of Americans don’t know how large language models work, and just 42 percent can define a deepfake.

The founder of one of the most prominent AI companies admits there is a gap in understanding how AI really works, even in the industry itself. Dario Amodei of Anthropic, responsible for the LLM Claude, shared on his blog, “people outside the field are often surprised and alarmed to learn that we do not understand how our own AI creations work.”

This information asymmetry hasn’t slowed AI’s adoption, with many companies now mandating its use. But with widespread adoption comes increased risk. Users often don’t realize that AI models can reinforce existing biases in hiring, lending, or content recommendations, perpetuating discrimination without the user’s awareness. Many also don’t understand that AI can ‘hallucinate,’ creating confident-sounding but entirely false information that gets accepted at face value.

Meanwhile, those who avoid AI altogether risk missing out on one of the most transformative technologies of our time, potentially falling behind in several areas from productivity to creative development.

When it comes to AI and its continuous changes and developments, it is difficult to speak definitively. Still, it is already clear that digital literacy has become a crucial strategic advantage. For marketers, this means not only upskilling themselves but also guiding customers through the evolving AI landscape.

Product Marketing Strategist Brie Abramowicz highlights a critical gap: AI is being rapidly adopted in the workplace without a matching investment in literacy. Her perspective reinforces the idea that trust begins with understanding not only the outputs but also the systems behind them.

Learning in public and navigating uncertainty

The way to build unshakeable trust isn’t to have all the answers, but to be willing to learn transparently alongside your audiences. Even industry leaders are discovering that admitting uncertainty and course-correcting publicly can actually strengthen consumer confidence.

While the reward of trailblazing can be high, being at the forefront can invite controversy. Companies that have rolled out AI mandates without a clear plan to inform users and support staff through the process risk facing backlash.

That was the case after language-learning app Duolingo’s CEO Luis von Ahn announced the company was going “AI first.” The announcement celebrated how much more quickly the company was now able to develop new language courses while also phasing out work with contractors and freezing new hires unless the job couldn’t be handled by AI.

The announcement was followed by intense criticism from both employees and app users.

Von Ahn took to LinkedIn to clarify his previous stance: “One of the most important things leaders can do is provide clarity. When I released my AI memo a few weeks ago, I didn’t do that well.”

He goes into more detail and reassures staff and language learners that Duolingo will stay AI-first, but won’t leave staff alone on their learning journey. He also shared some ambivalence: “AI is creating uncertainty for all of us, and we can respond to this with fear or curiosity. By understanding the capabilities and limitations of AI now, we can stay ahead of it.”

If anything, the Duolingo case reinforces how trust is at the core of lasting relationships with users. The point is not to have every detail figured out, but rather to be transparent and proactive in communication, and to include your users in the journey.

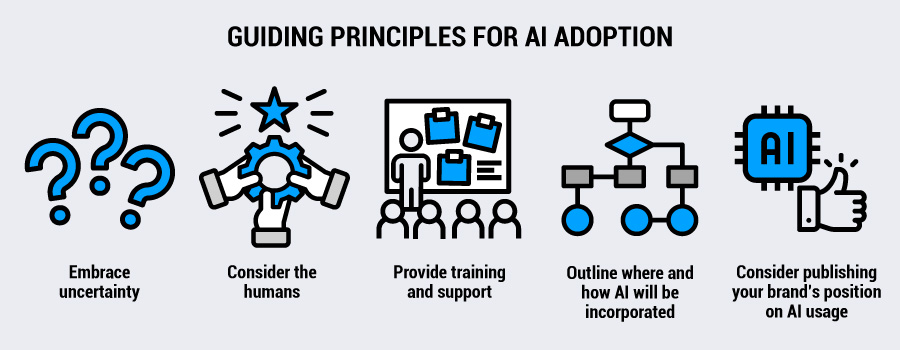

Here are a few guiding principles to consider:

- Embrace uncertainty: Stay curious and open to learning and adapting

- Consider the humans: Job uncertainty is a real concern, so address it and be clear about what is going to happen with workforce management

- Provide training and support: Empower employees to use the new tech with confidence

- Outline where and how AI will be incorporated: Explain how it will interact with and affect the end user

- Consider publishing your brand’s position on AI usage: Do so both as a form of accountability and to inspire others in your space

The New York Times took the lead and made its principles for generative AI in the newsroom open to the public. The document contains guidelines for journalists on how to use AI in reporting, and when to steer clear. It concludes by saying: “Readers must be able to trust that any information presented to them is factually accurate.”

The news outlet also has a dedicated AI initiatives department, where a group of journalists with experience in machine learning explores ways to leverage AI for better journalism practices in their newsroom.

Similarly, the British broadcaster Channel 4 has made its AI principles public, outlining its core beliefs around AI and how it is implementing the technology. Transparency and integrity are at the center of those principles.

The document states: “Trust and truthfulness matter. We want to combat misinformation, so we will actively avoid using AI systems that could lead to the spread of misinformation or disinformation.”

These examples show that transparency frameworks are still being created and tested. The brands that will truly differentiate themselves are those that go beyond disclosure to make trust-building their competitive advantage.

Beyond transparency: the marketer’s edge

While most brands are still figuring out basic AI disclosure, forward-thinking marketers are turning transparency into a competitive weapon. A few key points to consider for going beyond compliance to build unshakeable trust:

Consider users as AI co-creators

Instead of just disclosing AI use, invite customers to participate in shaping how you use it. Let them vote on AI features, test new capabilities, or even help train your models through feedback. This transforms the dynamic from the expected “brand uses AI on customer” to a more exciting “brand and customer collaborate with AI.”

See AI mistakes as trust builders

Assume that you won’t always get it right, and instead of hiding AI failures or mistakes, document and share these instances transparently.

When your AI chatbot gives a weird response or your generated image has flaws, turn it into content that acknowledges human oversight and commitment to improvement. This approach builds more trust than pretending AI is perfect.

Create trust audits for AI touchpoints

Rate each touchpoint on a “trust-to-risk scale” and prioritize transparency efforts where the stakes are highest. For example, a retail brand might rate AI-generated product reviews as high-risk (customers rely on authenticity for purchase decisions) while AI-optimized email subject lines rank as low-risk.

The high-risk touchpoints get prominent disclosure and human oversight, while lower-risk applications might only require internal documentation. This approach can flag trust risks before they turn into reputational damage.
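For teams that want to make the audit concrete, the rating step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two rating dimensions (`customer_reliance` and `exposure`), the multiplication used to combine them, and the threshold of 15 are all hypothetical choices, not a scoring scheme defined in this article or by any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Touchpoint:
    """One place where AI touches the customer experience (hypothetical model)."""
    name: str
    customer_reliance: int  # assumed scale: 1 (low) to 5 (customers depend on authenticity)
    exposure: int           # assumed scale: 1 (internal only) to 5 (seen by every customer)

    @property
    def risk_score(self) -> int:
        # Assumption: risk grows with both reliance and exposure, so multiply them.
        return self.customer_reliance * self.exposure


def required_action(tp: Touchpoint, high_risk_threshold: int = 15) -> str:
    """Map a score to the level of transparency effort (threshold is illustrative)."""
    if tp.risk_score >= high_risk_threshold:
        return "prominent disclosure + human oversight"
    return "internal documentation"


def audit(touchpoints):
    """Return (name, score, action) tuples, highest-risk touchpoints first."""
    ranked = sorted(touchpoints, key=lambda tp: tp.risk_score, reverse=True)
    return [(tp.name, tp.risk_score, required_action(tp)) for tp in ranked]


# The two examples from the retail scenario above, with made-up ratings.
touchpoints = [
    Touchpoint("AI-optimized email subject lines", customer_reliance=1, exposure=4),
    Touchpoint("AI-generated product reviews", customer_reliance=5, exposure=5),
]

for name, score, action in audit(touchpoints):
    print(f"{score:>2}  {name}: {action}")
```

The value of a sketch like this is less the arithmetic than the conversation it forces: agreeing on the ratings for each touchpoint makes a team state explicitly where customers rely most on authenticity.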

Trust as the compass in an AI-rich landscape

We stand at a pivotal moment where the question “is this AI?” is quickly becoming irrelevant, replaced by the more important “can I trust who’s telling me this?” This shift is more than just a technological detail; it’s a fundamental rewiring of how brands and consumers relate to each other.

The playbook is still being written, but the principles are clear: transparency isn’t just ethical; it’s an edge. Admitting to not having all the answers isn’t a weakness; it’s a differentiator. In a world where AI can replicate almost anything, the one thing it can’t replicate is human trust.
