In November 2024, Australia’s Parliament passed the Online Safety Amendment (Social Media Minimum Age) Bill 2024. This new law has grabbed international attention as a serious attempt to address harm to children resulting from the use of social media platforms. We look at the Amendment’s scope, which social media platforms are affected, how companies need to comply, and what the potential penalties are.

What is the Australian Online Safety Amendment?

The Online Safety Amendment amends the Online Safety Act 2021. It’s designed to create specific requirements for children’s access to social media platforms, the most notable being a ban for children in Australia under age 16 from holding accounts on these platforms. Companies operating affected social media platforms will be expected to introduce and enforce age gating to prevent children from using the platforms.

A number of international laws restrict access to social platforms for children under age 13, and age verification is required, though often circumvented. Under many data privacy laws, 13 is also the age at which people generally become categorized as adults, or at which they enter a mid-range covering ages 13 to 16. Typically from that age, where consent is required, it must be obtained directly from the individual rather than from a parent or guardian.

The Online Safety Amendment dovetails with broader data privacy law in that it includes specific privacy protections, including limits on how platforms use and retain the personal data they collect on children. Noncompliance penalties are also substantial.

Why was the Australian Online Safety Amendment introduced?

The bill was introduced to address ongoing concerns about the impacts of children’s access to social media platforms. The impacts of exposure to social media at a critical developmental stage are not yet fully understood, so the full potential for harm is not yet known. Studies have already shown negative impacts on children’s and teens’ mental health, and there have been criminal cases involving predators accessing and manipulating children through social platforms.

Children’s activities online also aren’t always well monitored or carefully limited, and the prevalence of Wi-Fi and mobile devices makes access to social platforms ever easier.

What has the reaction been to the Australian social media ban for children?

The law has been controversial in some circles. Not unexpectedly, reviews have been mixed, with debate over whether the law goes too far, does not go far enough, or misses the mark in its intent. Critics make a variety of claims, including:

- the law may introduce new risks and cause more harm

- the law’s scope and exclusions are insufficient or incorrectly targeted

- children’s autonomy is compromised

- children are digitally savvy and will easily find ways around the ban

- opportunities for learning and growth will be stifled

- significant burdens will be levied on social media platforms to create and manage age restrictions

Perhaps ironically, critics have noted that requiring social media platforms to collect and use potentially sensitive personal information from children to verify age and enforce the law’s requirements may create greater risks to children’s privacy and safety, as well as to the platforms’ compliance with other privacy laws.

To accompany the new law and its requirements, Australia’s eSafety Commissioner has provided content and services for educators, parents, and others, targeting the topic of children’s online safety, and how to support children’s safe activities online. This touches on an important point: that it will take a variety of measures, from legal to educational to parental, to safely manage children’s use of digital social spaces.

Who has to comply with the Australian Social Media Minimum Age law?

Certain social media platforms, referred to as “age-restricted social media platforms,” are required to self-regulate under the law. They must take “reasonable steps” to prevent Australian children under age 16 (“age-restricted users”) from creating or using accounts or other profiles where potential harms are considered likely to occur.

Children under the age of 13 are required to be explicitly excluded in the platforms’ terms of service to remove any ambiguity about at what age it is appropriate to start using social media.

The eSafety Commissioner will be responsible for writing guidelines on the “reasonable steps” that the affected age-restricted social media platforms are required to take. The new law does not include in its text specifics like what age estimation or verification technology may be used or what the reasonable steps guidelines will include.

The law’s text does not explicitly reference any current social platforms, as popular ones tend to change over time. However, in the explanatory memorandum, the government noted that the law is intended to apply to companies like Snapchat and Facebook (parent company Meta), rather than companies offering services like messaging, online gaming, or services primarily aimed at education or health support, with Google Classroom or YouTube given as examples.

However, such distinctions can be tricky, as a number of social media platforms that would likely be included do also enable functions like messaging and gaming, for example. There are legislative rules that can set out additional coverage conditions or specific electronic services that the law includes or exempts.

Businesses have one year from the passage of the Social Media Minimum Age bill to comply, so enforcement will likely begin as of or after November 2025.

What measures do companies need to take to comply with the Online Safety Amendment?

Social media platforms that meet the Amendment’s inclusion requirements will need to implement or bolster functions on their platforms to verify user age and prevent children under 16 from creating or maintaining accounts. Presumably, the platforms will also need to purge existing accounts belonging to children. The law does not specify what technology should be used or how age should be verified, as this changes over time.
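
As a rough illustration of the simplest form of age gating, the sketch below checks a birth date against the minimum age of 16. It assumes the platform already holds a trustworthy birth date, which is exactly the part the law leaves open. The function names are hypothetical and not taken from the law or from any platform.

```python
from datetime import date

MINIMUM_AGE = 16  # minimum account-holding age described in the article


def age_on(birth_date: date, today: date) -> int:
    """Return the number of completed years of age on the given day."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def may_hold_account(birth_date: date, today: date) -> bool:
    """Hypothetical gate: True only if the person is at least MINIMUM_AGE."""
    return age_on(birth_date, today) >= MINIMUM_AGE
```

In practice a declared birth date is easy to falsify, so a check like this would likely be only one signal among several age estimation or verification measures.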

The definition of a user on these social media platforms involves being an account holder who is logged in, so children who are not logged in to accounts can continue to access content or products on these platforms if available. As platforms are not able to access nearly as much user data from those who are not logged in, many platforms significantly limit functionality for individuals who are not logged-in account holders.

Other existing privacy law requirements dovetail with the Online Safety Amendment’s requirements, particularly those of the Privacy Act 1988. For example, covered platforms can only use collected personal data for the purpose of compliance unless explicitly permitted under the Privacy Act or unless the user’s informed and voluntary consent is obtained. This information must then be destroyed after its use or disclosure for that specific purpose.

What other legal actions address children’s use of social media?

Around the world there are a number of laws that address children’s privacy and online activities, though they are broader and don’t explicitly target social media use. Some of them predate the relevant platforms’ existence.

Additionally, broader regional data privacy laws, like the Privacy Act 1988, are relevant, and they all include specific and stringent requirements for accessing and handling children’s data, as well as consent requirements.

In the United Kingdom there is the U.K. Online Safety Act, which has a section dedicated to “Age-appropriate experiences for children online.”

In the EU, there is the Digital Services Act (DSA), which focuses on a wide range of digital intermediary services. It’s aimed at “very large online platforms”, aka VLOPs, and very large online search engines, or VLOSEs. The list of designated VLOPs does include social media platforms. The DSA imposes strict requirements to address risks that their operation poses to consumers, as well as aiming to protect and enhance individuals’ rights, particularly relating to data privacy, including those of minors.

In the United States, the Children’s Online Privacy Protection Act (COPPA) has been in place since 2000, though revised several times by the Federal Trade Commission, and aims to protect children under age 13 and their personal information. COPPA is broader, however, and not focused only on social media platforms, though they are covered under its requirements.

There are efforts to introduce substantial legislative updates, referred to as “COPPA 2.0”, which would further modernize the law, including raising the compliance age from 13 to 16 to protect more children. It would also include more stringent requirements for operators of social platforms if there are reasonable expectations that children under 16 use the platform.

At present, compliance is only required if there are known children under 13 using the services. Insisting that they don’t know for sure if children use the platforms is a common excuse to avoid compliance requirements, though children’s presence on social media platforms is widely known.

A number of companies have been charged with COPPA violations, including Epic Games, which makes the popular video game Fortnite, and the video app TikTok (parent company ByteDance). Interestingly, in early November 2024, the Canadian government ordered that TikTok’s Canadian operations be shut down due to security risks, which the company is appealing. The order will not likely affect consumer use of the app, however.

What are the penalties for violating the Australian Online Safety Amendment?

The Privacy Act applies to compliance and penalties as well, as violations of the Amendment will be considered “an interference with the privacy of the individual” for the purposes of the Privacy Act. The Information Commissioner will manage enforcement of the Social Media Minimum Age law, and noncompliance fines will be up to 30,000 “penalty units”, which as of the end of 2024 equals AUD 9.5 million.

A penalty unit is a way to standardize and calculate fines, accomplished by multiplying the current value of a single penalty unit — which is determined by the Information Commissioner and regularly updated to reflect inflation — by the number of penalty units assigned to the offence.
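
The multiplication described above can be sketched as follows. This is a minimal illustration: the function names are hypothetical, and the per-unit figure is only what the article's rounded numbers imply (AUD 9.5 million for 30,000 units), not an official value.

```python
from decimal import Decimal, ROUND_HALF_UP


def fine_amount(penalty_units: int, unit_value: Decimal) -> Decimal:
    """Fine = number of penalty units x current value of one unit."""
    return Decimal(penalty_units) * unit_value


def implied_unit_value(total_fine: Decimal, penalty_units: int) -> Decimal:
    """Back out the per-unit value implied by a quoted maximum fine."""
    per_unit = total_fine / Decimal(penalty_units)
    return per_unit.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Figures quoted in the article: up to 30,000 units, about AUD 9.5 million.
article_unit_value = implied_unit_value(Decimal("9500000"), 30_000)
```

Because the unit value is updated over time, the same number of penalty units translates into a different dollar amount whenever the value changes, which is the point of expressing fines in units.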

The Information Commissioner will also hold additional powers for information gathering and the ability to notify a social media platform and publicly release information if it’s determined the platform has violated the law.

Independent review of the law is required within two years of it coming into effect, so by November 2026.

The future of online data privacy on social media platforms

Australia’s Online Safety Amendment has significant implications for data privacy and children’s autonomy as governments, educators, and parents — in that country and around the world — struggle to balance children’s use of social media to enable connection, education, and entertainment while keeping them safe from misinformation and abuse.

The Social Media Minimum Age law places strict requirements on relevant platforms to implement age verification, prevent and remove account-holding by children, and also ensure the security of sensitive information required to do these verifications. The penalties for failing to adequately achieve this ban are steep, and compliance won’t be easy given how fast technologies change and how savvy many children are online. The amendment may well require its own amendments in a relatively short period of time.

There will be a lot of attention over the next two years on how this law rolls out and what works and doesn’t to fulfill requirements. The required report after the first two years should also prove illuminating, and provide guidance for other countries considering similar measures, or looking to update existing data privacy legislation to better protect children.

Companies implementing best practices for data privacy compliance and protection of users of websites, apps, social media platforms, and more should ensure they are well versed in relevant (and overlapping) laws, including specific requirements for special groups like children.

They should consult qualified legal counsel about obligations, and IT specialists about the latest technologies to meet their needs. They should also invest in well-integrated tools, like a consent management platform, to collect valid consent for data use where relevant and inform users about data handling and their rights.

2024 saw the number of new data privacy regulations continue to grow, especially in the United States. It also saw the effects of laws passed earlier as they came into force and enforcement began, like with the Digital Markets Act (DMA). But perhaps the biggest impact of data privacy in 2024 was how quickly and deeply it’s become embedded in business operations.

Companies that may not have paid a lot of attention to regulations have rapidly changed course as data privacy requirements have been handed down by companies like Google and Facebook. The idea of “noncompliance” stopped being complicated yet nebulous and became “your advertising revenue is at risk.”

We expect this trend of data privacy becoming a core part of doing business to continue to grow through 2025 and beyond. More of the DMA’s gatekeepers and other companies are likely to ramp up data privacy and consent requirements throughout their platform ecosystems and require compliance from their millions of partners and customers. Let’s not forget that data privacy demands from the public continue to grow as well.

We also expect to see more laws that include or dovetail with data privacy as they regulate other areas of technology and its effect on business and society. AI is the biggest one that comes to mind here, particularly with the EU AI Act having been adopted in March 2024. Similarly, data privacy in marketing will continue to influence initiatives across operations and digital channels. Stay tuned to Usercentrics for more about harnessing Privacy-Led Marketing.

Let’s peer into the future and look at how the data privacy landscape is likely to continue to evolve in the coming year, where the best opportunities for your company may lie, and what challenges you should plan for now.

2025 in global data privacy regulation

For the last several years, change has been the only constant in data privacy regulation around the world. Gartner predicted that 75 percent of the world’s population would be protected by data privacy law by the end of 2024. Were they right?

According to the International Association of Privacy Professionals (IAPP), as of March 2024, data privacy coverage was already close to 80 percent. So the prediction had been exceeded even before we were halfway through the year.

Data privacy regulation in the United States

The United States passed a record number of state-level data privacy regulations in 2024, with Kentucky, Maine, Maryland, Minnesota, Nebraska, New Hampshire, New Jersey, Rhode Island, and Vermont coming on board to bring the number of state-level US data privacy laws to 21. By contrast, six states passed laws in 2023, which was a record at the time.

The privacy laws in Florida, Montana, Oregon, and Texas went into effect in 2024. The privacy laws in Delaware, Iowa, Maryland, Minnesota, Nebraska, New Hampshire, New Jersey, and Tennessee go into effect in 2025.

Since the majority of US states still don’t have data privacy regulations, more of these laws are likely to be proposed, debated, and (at least sometimes) passed. It will be interesting to see if certain states that have wrangled with privacy legislation repeatedly, like Washington, will make further progress in that direction.

April 2024 saw the release of a discussion draft of the American Privacy Rights Act (APRA), the latest federal legislation in the US to address data privacy. It made some advances during the year, with new sections added addressing children’s data privacy (“COPPA 2.0”), privacy by design, obligations for data brokers, and other statutes. However, the legislation has not yet been passed, and with the coming change in government in January 2025, the future of APRA is unclear.

Data privacy regulation in Europe

The European Union continues to be at the forefront of data privacy regulation and working to keep large tech platforms in check. Two recent regulations, particularly, will continue to shape the tech landscape for some time.

The Digital Markets Act (DMA) and its evolution

With the Digital Markets Act in effect, the first six designated gatekeepers (Alphabet, Amazon, Apple, ByteDance, Meta, and Microsoft) had to comply as of March 2024. Booking.com was designated in May, and had to comply by November.

There is a good chance that additional gatekeepers will be designated in 2025, and that some current ones that have been dragging their metaphorical feet will start to accept the DMA’s requirements. We can expect to see the gatekeepers roll out new policies and requirements for their millions of customers in 2025 to help ensure privacy compliance across their platforms’ ecosystems.

More stringent consent requirements are also being accompanied by expanded consumer rights, including functions like data portability, which will further enhance competitive pressures on companies to be transparent, privacy-compliant, and price competitive while delivering great customer experiences.

The AI Act and its implementation

While the entirety of the AI Act will not be in effect until 2026, some key sections are already in effect in 2024, or coming shortly, so we can expect to see their influence. These include the ban on prohibited AI systems in EU countries and the rules for general purpose AI systems.

Given that training large language models (LLMs) requires an almost endless supply of data, and organizations aren’t always up front about getting consent for it, it’s safe to say that there will continue to be clashes over the technology’s needs and data privacy rights.

Data privacy around the world

There was plenty in the news involving data privacy around the world in 2024, and the laws and lawsuits reported on will continue to make headlines and shape the future of privacy in 2025.

AI, privacy, and consent

There have been complaints reported and lawsuits filed throughout 2024 regarding data scraping and processing without consent. Canadian news publishers and the Canadian Legal Information Institute most recently joined the fray. We don’t expect these issues to be resolved any time soon, though there should be some influential case law resulting once these cases have made their way through the courts. (It is unlikely that all of them will be resolved by settlements.) The litigation may have significant implications for the future of these AI companies as well, and not just for their products.

Social media and data privacy

As noted, laws that dovetail with data privacy are also becoming increasingly notable. One recent interesting development is Australia passing a ban on social media for children under 16. In addition to mental health concerns, some social media platforms — including portfolio companies of Alphabet, Meta, and TikTok parent company ByteDance — have run afoul of data privacy regulators, with penalties for collecting children’s data without consent, among other issues. It will be very interesting to see how this ban rolls out, how it’s enforced, and if it serves as inspiration elsewhere for comparable legislation.

The latest generation of data privacy laws and regulatory updates

The UK adopted its own customized version of the General Data Protection Regulation (GDPR), the UK GDPR, upon leaving the EU. It has recently published draft legislation for the UK Data (Use and Access) Bill, which is meant to further modernize the UK GDPR and reform the way data is used to benefit the economy. We will see if the law does get passed and what its practical effects may be.

Further to recent laws and updates for which we are likely to see the effects in 2025, in September 2024, Vietnam issued the first draft of its Personal Data Protection Law (PDPL) for public consultation.

Malaysia passed significant updates to its Personal Data Protection Act (PDPA) via the Personal Data Protection (Amendment) Act. The PDPA was first passed in 2010, so it was due for updates, and companies doing business in the country can expect the new guidelines to be enforced.

Also, the two-year grace period for Indonesia’s Law No. 27 of 2022 on Personal Data Protection (PDP Law) ended in October 2024, so we can expect enforcement to ramp up there as well.

Asia already has considerable coverage with data privacy regulation, as countries like China, Japan, South Korea, and India all have privacy laws in effect as well.

The future of privacy compliance and consent and preference management

Just as the regulation of data privacy is reaching an inflection point of maturity and becoming mainstream, so are solutions for privacy compliance, consent, and preference management.

Integrated solutions for compliance requirements and user experience

Companies that are embracing Privacy-Led Marketing in their growth strategy want solutions that can meet several needs, support growth, and seamlessly integrate into their martech stack. Simply offering a cookie compliance solution will no longer be enough.

Managing data privacy will require solutions that enable companies to obtain valid consent — for requirements across international jurisdictions — and signal it to ad platforms and other important tools and services. In addition to consent, companies need to centralize privacy user experience to provide customers with clear ways to express their preferences and set permissions in a way that respects privacy and enables organizations to deliver great experiences with customized communications, offers, and more.

Customer-centric data strategies

It may take some time for third-party cookie use and third-party data to go away entirely, but zero- and first-party data is the future, along with making customers so happy they never want to leave your company, rather than trying to collect every bit of data possible and preventing them from taking their business elsewhere.

We may see more strategies like Meta’s “pay or ok” attempt where users can pay a subscription fee to avoid having their personal data used for personalized ads, but given EU regulators’ response to the scheme, similar tactics are likely to have an uphill battle, at least in the EU.

Delivering peace of mind while companies stay focused on their core business

SMBs, particularly, also have a lot to do with limited resources, in addition to focusing on growing their core business. We can expect to see further deep integration of privacy compliance tools and services. These solutions will automate not only obtaining and signaling consent to third-party services, but also notifying users about data processing services in use and data handling, e.g. via the privacy policy, responding to data subject access requests (DSAR), and other functions.

Further to international compliance requirements, as companies grow they are going to need data privacy solutions that scale with them, and enable them to easily handle the complexities of complying with the requirements of multiple privacy laws and other relevant international and/or industry-specific policies and frameworks.

Frameworks like the IAB’s Global Privacy Platform (GPP) are one way of achieving this, enabling organizations to select relevant regional privacy signals to include depending on their business needs.

Usercentrics in 2025

Our keyword to encapsulate data privacy for 2024 was “acceleration”. For 2025 it’s “maturity.” That maturity shows in data privacy laws and other regulations that include data privacy (like those covering AI); in companies’ needs for solutions that enable multi-jurisdictional compliance and data management; in the widespread embrace of data privacy as a key part of doing business, and of Privacy-Led Marketing as a strategy for sustainable growth and better customer relationships; and in the financial and operational risks of noncompliance moving beyond regulatory penalties to revenues from digital advertising, customer retention, and beyond.

The Usercentrics team is on it. We’ll continue to bring you easy-to-use, flexible, reliable solutions to manage consent, user preferences, and permissions, and enable you to maintain privacy compliance and be transparent with your audience as your company grows. With world-class support at every step, of course. Plus we have a few other things up our sleeves. Stay tuned! Here’s to the Privacy-Led Marketing era. We can’t wait to help your company thrive.

The Video Privacy Protection Act (VPPA) is a federal privacy law in the United States designed to protect individuals’ privacy regarding their video rental and viewing histories. The VPPA limits the unauthorized sharing of video rental and purchase records. It was passed in 1988 after the public disclosure of Supreme Court nominee Robert Bork’s video rental records raised concerns about the lack of safeguards for personal information.

At the time of the Act’s enactment, video viewing was an offline activity. People would visit rental stores, borrow a tape, and return it after watching. Today, streaming services and social media platforms mean that watching videos is a largely digital activity. In 2023, global revenue from online video streaming reached an estimated USD 288 billion, with the US holding the largest share of that market.

Still, the VPPA has remained largely unchanged since its enactment, apart from a 2013 amendment. However, recent legal challenges to digital video data collection have led courts to reinterpret how the law applies to today’s video viewing habits.

In this article, we’ll examine what the VPPA law means for video platforms, the legal challenges associated with the law, and what companies can do to enable compliance while respecting users’ privacy.

Scope of the Video Privacy Protection Act (VPPA)

The primary purpose of the Video Privacy Protection Act (VPPA) is to prevent the unauthorized disclosure of personally identifiable information (PII) related to video rentals or purchases. PII under the law “includes information which identifies a person as having requested or obtained specific video materials or services from a video tape service provider.”

The law applies to video tape service providers, which are entities involved in the rental, sale, or delivery of prerecorded video materials. Courts have interpreted this definition to include video streaming platforms like Hulu and Netflix, which have widely replaced physical video tape service providers.

The VPPA protects the personal information of consumers. The law defines consumers as “any renter, purchaser, or subscriber of goods or services from a video tape service provider.”

Video tape service providers are prohibited from knowingly disclosing PII linking a consumer to specific video materials, except in the following cases:

- direct disclosure to the consumer

- to a third party with informed, written consent provided by the consumer

- for legal purposes, such as in response to a valid warrant, subpoena, or court order

- limited marketing disclosures, but only if consumers are given a clear opportunity to opt out, and the shared data includes only names and addresses and not specific video titles, unless it is for direct marketing to the customer

- as part of standard business operations, such as processing payments

- under a court order, if a court determines that the information is necessary and that the need cannot be met through other means, and the consumer is given the opportunity to contest the claim

The 2013 amendment expanded the conditions for obtaining consent, including through electronic means using the Internet. This consent must:

- be distinct and separate from other legal or financial agreements

- let consumers provide consent either at the time of disclosure or in advance for up to two years, with the option to revoke it sooner

- offer a clear and conspicuous way for consumers to withdraw their consent at any time, whether for specific instances or entirely
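
The consent conditions listed above can be modeled as a simple check. This is a sketch under stated assumptions, not a statement of how any court or platform applies the VPPA: the `ConsentRecord` type and `consent_covers` function are hypothetical, and two years is approximated as 730 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Assumption: "up to two years" approximated as 730 days.
MAX_ADVANCE_CONSENT = timedelta(days=730)


@dataclass
class ConsentRecord:
    given_at: datetime
    separate_from_other_terms: bool  # distinct from other legal or financial agreements
    withdrawn_at: Optional[datetime] = None  # set when the consumer revokes consent


def consent_covers(consent: ConsentRecord, disclosure_at: datetime) -> bool:
    """Return True if the recorded consent could cover a disclosure at this time."""
    if not consent.separate_from_other_terms:
        return False
    if consent.given_at > disclosure_at:
        return False  # consent must exist at or before the disclosure
    if disclosure_at - consent.given_at > MAX_ADVANCE_CONSENT:
        return False  # advance consent has lapsed
    if consent.withdrawn_at is not None and consent.withdrawn_at <= disclosure_at:
        return False  # consumer withdrew consent before the disclosure
    return True
```

Keeping the withdrawal timestamp on the record, rather than deleting the record, lets a system show what consent was in force at the moment of any past disclosure.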

Tracking technologies and Video Privacy Protection Act (VPPA) claims

Tracking technologies like pixels are central to many claims alleging violations of the VPPA. Pixels are small pieces of code embedded on websites to monitor user activities, including interactions with online video content. These technologies can collect and transmit data, such as the titles of videos someone viewed, along with other information that may identify individuals. This combination of data may meet the VPPA’s definition of personally identifiable information (PII).

VPPA claims often arise when companies use tracking pixels on websites with video content and transmit information about users’ video viewing activity to third parties without requesting affirmative consent. Courts have debated what constitutes a knowing disclosure under the VPPA, but installing tracking pixels that collect and share video data has been found sufficient to potentially establish knowledge in some cases.
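
One way to reduce this kind of exposure is to keep video-identifying fields out of tracking payloads unless consent has been given. The sketch below is illustrative only: the field names and the `build_tracking_payload` function are hypothetical and not part of any real pixel or analytics API, and whether such filtering is sufficient in a given case is a legal question.

```python
from typing import Any, Dict

# Hypothetical names for fields that reveal which video a person watched.
VIDEO_FIELDS = {"video_title", "video_id"}


def build_tracking_payload(event: Dict[str, Any], has_video_consent: bool) -> Dict[str, Any]:
    """Return a copy of the event, dropping video fields when consent is absent."""
    if has_video_consent:
        return dict(event)
    return {key: value for key, value in event.items() if key not in VIDEO_FIELDS}
```

Applying the filter before the payload leaves the site means the third party never receives the viewing data, rather than relying on it to discard that data later.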

Lawsuits under the Video Privacy Protection Act (VPPA)

Many legal claims under the VPPA focus on one or more of three critical questions:

- Does the party broadcasting videos qualify as a video tape service provider?

- Is the individual claiming their rights were violated considered a consumer?

- Does the disclosed information qualify as PII?

Below, we’ll look at how courts have considered these questions and interpreted the law in the context of digital video consumption.

Does the party broadcasting video qualify as a video tape service provider?

Who is considered a video tape service provider under the law may depend on multiple factors. Courts have established that online streaming services qualify, but some rulings have considered other factors, which we’ll outline below, to decide whether a business meets the law’s definition.

Live streaming

The VPPA defines a video tape service provider as a person engaged in the business of the rental, sale, or delivery of “prerecorded video cassette tapes or similar audiovisual materials.” In 2022, a court ruled that companies do not qualify as video tape service providers for any live video broadcasts, as live streaming does not involve prerecorded content.

However, if a company streams prerecorded content, it may qualify as a video tape service provider in relevant claims.

“Similar audio visual materials”

The definition of a video tape service provider in the digital age includes more than just video platforms that broadcast movies and TV shows. In a 2023 case, a court ruled that a gaming and entertainment website offering prerecorded streaming video content fell within the scope of the VPPA definition of a video tape service provider.

Focus of work

Another 2023 ruling found that the VPPA does not apply to every company that happens to deliver audiovisual materials “ancillary to its business.” Under this decision, a video tape service provider’s primary business must involve providing audiovisual materials. Businesses using video content only as part of their marketing strategy would not qualify as a video tape service provider under this reading of the law.

Is the individual claiming rights violations considered a consumer?

Online video services frequently operate on a subscription-based business model. Many legal challenges under the VPPA focus on whether an individual qualifies as a “subscriber of goods or services from a video tape service provider.”

Type of service subscribed to

Courts have varied in their opinions on whether being a consumer depends on subscribing to videos specifically. In a 2023 ruling, a court held that subscribing to a newsletter that encourages recipients to view videos, but is not a condition of accessing them, does not qualify an individual as a subscriber of video services under the VPPA.

By contrast, a 2024 ruling took a broader approach, finding that the term “subscriber of goods or services” is not limited to audiovisual goods or services. The US Court of Appeals for the Second Circuit determined that subscribing to an online newsletter provided by a video tape service provider qualifies an individual as a consumer. This decision expanded the definition to recognize individuals who subscribe to any service offered by a video tape service provider as consumers.

Payment

Courts have generally agreed that providing payment to a video tape service provider is not necessary for an individual to be considered a subscriber. However, other factors play a role in establishing this status.

A 2015 ruling held that being a subscriber requires an “ongoing commitment or relationship.” The court found that merely downloading a free mobile app and watching videos without registering, providing personal information, or signing up for services does not meet this standard.

However, in a 2016 case, the US Court of Appeals for the First Circuit determined that providing personal information to download a free app, such as an Android ID and GPS location, did qualify the individual as a subscriber. Similarly, in the 2024 ruling above, the Second Circuit found that providing an email address, IP address, and device cookies for newsletter access constituted a meaningful exchange of personal information, qualifying the individual as a subscriber.

Does the disclosed information qualify as PII?

Courts have broadly interpreted PII to include traditional identifiers like names, phone numbers, and addresses, as well as digital data that can reasonably identify a person in the context of video consumption.

In the 2016 ruling referenced above, the First Circuit noted that “[m]any types of information other than a name can easily identify a person.” The court held that GPS coordinates and device identifier information can be linked to a specific person, and therefore qualified as PII under the VPPA.

Just two months later, the US Court of Appeals for the Third Circuit ruled more narrowly, stating that the law’s prohibition on disclosing PII applies only to information that would enable an ordinary person to identify a specific individual’s video-watching behavior. The Third Circuit held that digital identifiers like IP addresses, browser fingerprints, and unique device IDs do not qualify as PII because, on their own, they are not enough for an ordinary person to identify an individual.

These conflicting rulings highlight the ongoing debate about what constitutes PII, especially as digital technologies continue to evolve.

Consumers’ rights under the Video Privacy Protection Act (VPPA)

Although not explicitly framed as consumer rights under the law, the VPPA does grant consumers several rights to protect their information.

- Protection against unauthorized disclosure: Consumers’ PII related to video rentals, purchases, or viewing history cannot be disclosed without consent or other valid legal basis.

- Right to consent: Consumers must provide informed, written consent before a video tape service provider can disclose their PII. This consent must be distinct and separate from other agreements. It can be given for a set period of up to two years and can be revoked at any time.

- Right to opt out: Consumers must be given a clear and conspicuous opportunity to opt out of the disclosure of their PII.

- Right to notice in legal proceedings: If PII is to be disclosed under a court order, consumers must be notified of the proceeding and given an opportunity to appear and contest the disclosure.

- Right to private action: Consumers can file civil proceedings against video tape service providers for violations of the VPPA.

Penalties under the Video Privacy Protection Act (VPPA)

The VPPA allows individuals affected by violations to file civil proceedings. Remedies available under the law include actual damages, which the statute sets at no less than USD 2,500 in liquidated damages per violation.

Courts may also award punitive damages to penalize particularly egregious or intentional misconduct. Additionally, plaintiffs can recover reasonable attorneys’ fees and litigation costs. Courts may also grant appropriate preliminary or equitable relief.

The VPPA statute of limitations requires that any lawsuit be filed within two years from the date of the violation, or within two years from when it was discovered.

Compliance with the Video Privacy Protection Act (VPPA)

Businesses that act as video tape service providers under the VPPA can take several steps to meet their legal obligations.

1. Conduct a data privacy audit

A data privacy audit can help businesses understand what personal data they collect, process, and store, and whether these practices comply with the VPPA. The audit should include assessing the use of tracking technologies like pixels and cookies to confirm whether they are correctly set up and classified.

2. Obtain informed, specific user consent

The VPPA requires businesses to obtain users’ informed, written consent before sharing PII. Implementing a consent management platform (CMP) like Usercentrics CMP can make it easier to collect, manage, and store consent from users.

VPPA compliance also requires businesses to provide clear and easy-to-find options for consumers to opt out of data sharing, which a CMP can also facilitate. Under the VPPA amendment, consent given in advance is valid for no more than two years, so businesses must have a process for renewing consent before it expires.
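The two-year limit and renewal process described above could be tracked along the following lines. This is a minimal sketch, not a compliance tool: the 30-day renewal lead time, the function names, and the choice to count two calendar years from the consent date are all assumptions made for illustration.

```python
from datetime import date, timedelta

# Assumption: consent lapses two calendar years after it was given.
MAX_CONSENT_YEARS = 2
# Hypothetical lead time for prompting users to renew before expiry.
RENEWAL_LEAD = timedelta(days=30)


def consent_expiry(given_on: date) -> date:
    """Return the date two calendar years after consent was given."""
    try:
        return given_on.replace(year=given_on.year + MAX_CONSENT_YEARS)
    except ValueError:
        # 29 February has no counterpart in a non-leap year; fall back to 28 February.
        return given_on.replace(year=given_on.year + MAX_CONSENT_YEARS, day=28)


def needs_renewal(given_on: date, today: date) -> bool:
    """True once today falls inside the renewal window or past the expiry date."""
    return today >= consent_expiry(given_on) - RENEWAL_LEAD
```

A scheduled job could call `needs_renewal` for each stored consent record and prompt the user again when it returns `True`.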

3. Implement transparent communication practices

Businesses should help consumers understand how their data is used so they can make an informed decision about whether to consent to its disclosure. Cookie banners used to obtain consent should contain simple, jargon-free language to explain the purpose of cookies. They should clearly indicate if third-party cookies are used and identify the parties with whom personal information is shared.

Businesses should include a direct link to a detailed privacy policy, both in the cookie banner and in another conspicuous location on their website or mobile app. Privacy policies must explain how PII is collected, used, and shared, along with clear instructions on how consumers can opt out of PII disclosures.

4. Consult qualified legal counsel

Legal experts can help businesses achieve VPPA compliance and offer tailored advice based on specific business operations. Counsel can also help businesses keep up with current litigation to understand how courts are interpreting the VPPA, which is critical as the law continues to face new challenges and evolving definitions.

Usercentrics does not provide legal advice, and information is provided for educational purposes only. We always recommend engaging qualified legal counsel or privacy specialists regarding data privacy and protection issues and operations.

The EU Cyber Resilience Act (CRA) has been in the works for several years, but has now been adopted by EU regulators. It enters into force 10 December 2024, though its provisions will be rolled out over the next several years. We look at what the CRA is, who it affects, and what it means for businesses in EU markets.

What is the Cyber Resilience Act (CRA)?

The EU Cyber Resilience Act aims to bring greater security to software and hardware that includes digital elements, as well as the networks to which these products connect. Focused on cybersecurity and reducing vulnerabilities, the law covers products that can connect to the internet, whether wired or wireless, like laptops, mobile phones, routers, mobile apps, video games, desktop applications, and more.

Although the CRA enters into force on 10 December 2024, its requirements are being rolled out gradually. Reporting obligations begin to apply 21 months after entry into force, and all remaining provisions apply 36 months after entry into force, in late 2027.
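The rollout periods above can be worked out with simple calendar arithmetic. The sketch below counts whole months from the entry-into-force date given in the text. The helper name `add_months` is hypothetical, and the exact application dates should be confirmed against the regulation itself, which may count the periods slightly differently.

```python
from datetime import date


def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole calendar months, keeping the day of month.

    Assumption: the day of month exists in the target month (true for day 10).
    """
    total = start.month - 1 + months
    return date(start.year + total // 12, total % 12 + 1, start.day)


ENTRY_INTO_FORCE = date(2024, 12, 10)

reporting_start = add_months(ENTRY_INTO_FORCE, 21)   # falls in September 2026
full_application = add_months(ENTRY_INTO_FORCE, 36)  # falls in December 2027
```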

Broader scope of EU cybersecurity initiatives

The CRA is part of the larger EU Cybersecurity Strategy and complements the Directive on measures for a high common level of cybersecurity across the European Union, known as the NIS2 Directive. The Strategy aims to “build resilience to cyber threats and ensure citizens and businesses benefit from trustworthy digital technologies.” It also aims to address the cross-border nature of cybersecurity threats to help ensure products sold across the EU meet adequate and consistent standards.

With the ever-growing number of connected products in consumers’ lives and used for business operations, the need for security and vigilance in manufacturing and consumer goods is only likely to grow. The law also intends to ensure consumers receive adequate information about the security and vulnerabilities of products they purchase so they can make informed decisions at home and at work.

Who and what does the Cyber Resilience Act apply to?

The CRA applies to manufacturers, retailers, and importers of products — both hardware and software — if they have digital components. This does include consent management platforms.

Under the law, included products will have to comply with specific requirements throughout the full product lifecycle, from the design phase to when they’re in consumers’ hands. Design, development, and production will need to ensure adequate levels of cybersecurity based on risk levels and factors. It’s a bit like the concept of privacy by design, but even more security-focused and codified into law.

How can companies comply with the Cyber Resilience Act?

Companies required to comply will be responsible for bringing to market only products that have no known exploitable vulnerabilities and that are configured in a way that is “secure by default”. Products will also need to bear the CE mark to show compliance.

Additionally, companies will need to implement various other security measures, including:

- control mechanisms like authentication and identity/access management

- high-level encryption (both in transit and at rest)

- mechanisms to enable resilience against denial-of-service (DoS) attacks

Handling vulnerabilities under the CRA

Manufacturers face specific requirements for handling vulnerabilities. These include identifying and documenting the components their products contain, along with any vulnerabilities, and creating a software bill of materials (SBOM) that lists top-level dependencies in a common, machine-readable format (where relevant).
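To illustrate what a machine-readable software bill of materials might look like, here is a minimal sketch that emits a CycloneDX-style JSON document. The text above does not name a required format, so the choice of CycloneDX is an assumption, as are the example dependencies and the `build_sbom` helper. The field names reflect the CycloneDX JSON layout as we understand it, and real output should be validated against the official schema.

```python
import json

# Hypothetical top-level dependencies of an illustrative product.
dependencies = [
    {"name": "openssl", "version": "3.0.13"},
    {"name": "zlib", "version": "1.3.1"},
]


def build_sbom(deps: list[dict]) -> dict:
    """Build a minimal CycloneDX-style document listing top-level components."""
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": [
            {"type": "library", "name": d["name"], "version": d["version"]}
            for d in deps
        ],
    }


# Serialize to JSON so other tools can consume the component list.
sbom_json = json.dumps(build_sbom(dependencies), indent=2)
```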

Any discovered vulnerabilities will have to be addressed through subsequent security updates that will have to meet a number of requirements:

- delivered without delay

- provided free of charge

- including advisory messages for users, with information like necessary actions

- implementation of a vulnerability disclosure policy

- public disclosure of repaired vulnerabilities, with:

  - description of the vulnerabilities

  - information to identify the product affected

  - severity and impact of the vulnerabilities

  - information to help users remediate the vulnerabilities

Reporting requirements under the CRA

In the event of a severe cybersecurity incident or exploited vulnerability, the manufacturer will have to report the issue within 24 hours by electronic notification to the European Union Agency for Cybersecurity and the competent computer security incident response team. A number of factors determine which team is competent. Follow-up notices are also usually required within 72 hours and 14 days. Timely notification of product end users is also required.
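The reporting windows above lend themselves to a simple deadline calculation. The sketch below assumes all three windows run from the moment the manufacturer becomes aware of the issue, which is a simplification, since the regulation may start some clocks at a different point. The stage labels and function name are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Windows taken from the text above; the stage labels are illustrative only.
REPORTING_WINDOWS = {
    "initial_notification": timedelta(hours=24),
    "first_follow_up": timedelta(hours=72),
    "second_follow_up": timedelta(days=14),
}


def reporting_deadlines(aware_at: datetime) -> dict[str, datetime]:
    """Return the latest time for each notice, counted from the moment of awareness."""
    return {stage: aware_at + window for stage, window in REPORTING_WINDOWS.items()}


# Example: issue identified at 09:00 UTC on 4 January 2027.
deadlines = reporting_deadlines(datetime(2027, 1, 4, 9, 0, tzinfo=timezone.utc))
```

Using timezone-aware timestamps avoids ambiguity when the incident response team and the authority are in different time zones.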

Consent requirements under the CRA

The CRA is focused on cybersecurity, so it does not focus on the end user or on consent and its management the way the GDPR does, for example. However, like data privacy laws, it requires transparency and notification of important information, including reporting to authorities as required, and to end users in the event of a security incident.

Manufacturers’ provision of clear information on cybersecurity measures and potential vulnerabilities in their products will enable informed decision-making by consumers. This is also a goal of data privacy laws like the GDPR.

Additionally, the regulation is quite clear on products’ need for security to prevent unauthorized access and to protect potentially sensitive personal data, also goals of privacy regulations.

Critical products and special requirements under the CRA

Hardware and software products with digital elements face different requirements under the CRA depending on factors like use. For example, some products are considered critical because under the NIS2 Directive essential entities critically rely on them.

Cybersecurity incidents or vulnerability exploitation involving these products could seriously disrupt crucial supply chains or networks, or pose a risk to the safety, security, or health of users. The products may also be critical to the cybersecurity of other networks, products, or services. The European Commission will maintain the list of critical products. Examples include “Hardware Devices with Security Boxes” and smartcards.

Products considered critical will have to obtain a European cybersecurity certificate at the required assurance level (e.g. substantial), in keeping with an accepted European cybersecurity certification scheme where possible. There is also a list of “important” products that will need to meet conformity assessment requirements, though these are not classed as critical. These include VPNs, operating systems, identity management systems, routers, interconnected wearables, and more. The European Commission will also maintain this list.

What are the exclusions to Cyber Resilience Act compliance?

Certain products that are already covered by other product safety regulations are excluded from the scope of the CRA. These include motor vehicles, civil aviation vehicles, medical devices, products for national security or defense, etc. Hardware without digital elements would not be included, nor would products that can’t be connected to the internet or other network, or that can’t be exploited through cyber attack (e.g. it holds no data).

What are the penalties for noncompliance under the Cyber Resilience Act?

Failure to maintain adequate security standards, fix vulnerabilities, notify relevant authorities and parties about security incidents, or otherwise violating the CRA can result in fines up to EUR 15 million or 2.5 percent of global annual turnover for the preceding year, whichever is higher. These penalties are even higher than the first tier of penalties for GDPR violations.
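The “whichever is higher” rule can be shown with a short calculation. This sketch uses the figures quoted above and works in whole euros to avoid floating-point rounding. The function name is hypothetical, and the result is only the upper limit described in the text, not a prediction of any actual fine.

```python
FIXED_CAP_EUR = 15_000_000


def max_cra_fine(global_annual_turnover_eur: int) -> int:
    """Return the higher of EUR 15 million or 2.5 percent of turnover, in whole euros."""
    turnover_based = global_annual_turnover_eur * 25 // 1000  # 2.5 percent, rounded down
    return max(FIXED_CAP_EUR, turnover_based)
```

Under these figures, the turnover-based amount only exceeds the fixed cap once global annual turnover passes EUR 600 million.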

Usercentrics and the Cyber Resilience Act

The CRA will apply to our products by 2027. However, Usercentrics takes security and data protection as seriously as we do valid consent under international privacy laws — today and every day. We are always evaluating our practices, from design to development to implementation and maintenance, and will continue to upgrade our products and systems to keep them, our company, and our partners and customers as safe as possible.

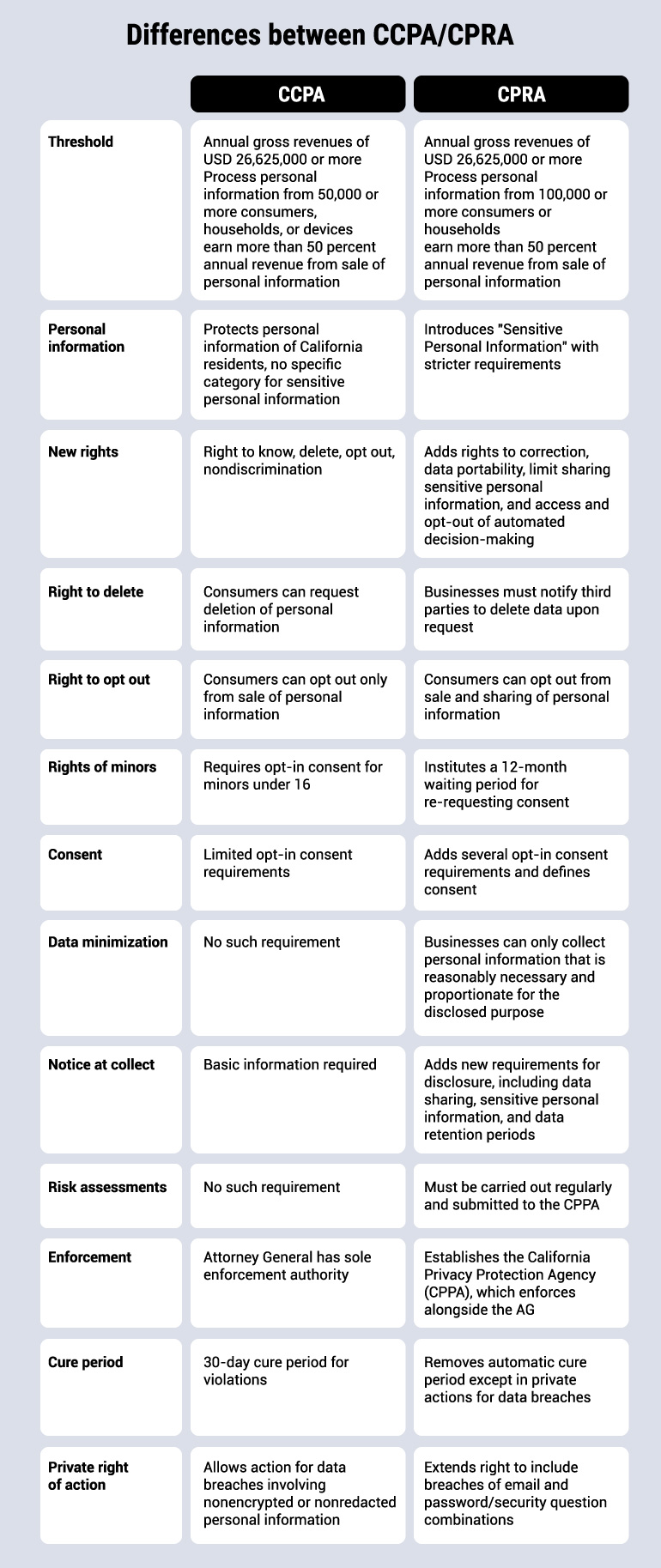

As organizations handle people’s personal data across borders, regulations like the European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA)/California Privacy Rights Act (CPRA) have become central to protecting privacy rights. Both regulations establish rules for when and how organizations can collect, use, and share personal data to give individuals control over their information.

Although the GDPR and the CCPA/CPRA share common goals, their scope, requirements, and enforcement mechanisms vary significantly. Understanding these differences is essential for organizations to avoid penalties and build trust with the people whose data they handle.

We cover who these regulations apply to, their similarities and differences, and how organizations can implement compliance measures effectively.

CCPA vs GDPR: understanding the basics

The GDPR and the CCPA/CPRA are landmark data privacy laws, each setting standards for how personal data is managed and protected. Before going into details about their scope and application, let’s look at what these laws are.

What is the GDPR?

The General Data Protection Regulation (GDPR) governs data collection and processing for individuals located in the 27 European Union (EU) member states and the European Economic Area (EEA) countries of Iceland, Liechtenstein, and Norway. It is designed to protect individuals’ privacy rights and establish consistent data protection standards across the EU/EEA. The GDPR applies to organizations that either offer goods or services to or monitor the behavior of individuals within these regions, regardless of where the business is located.

Effective since May 25, 2018, the GDPR has become a global benchmark for data protection, influencing data privacy legislation worldwide.

What is the CCPA?

The California Consumer Privacy Act (CCPA), effective January 1, 2020, is the first comprehensive data privacy law passed in the United States. It establishes a framework for protecting the personal information of California residents and regulates how businesses collect, share, and process this data.

The California Privacy Rights Act (CPRA) amended and expanded the CCPA, increasing consumer protections and introducing stricter obligations for businesses, such as increased transparency and limits on the use of sensitive information. The CPRA also created the California Privacy Protection Agency (CPPA) to enforce privacy laws in the state.

While the CPRA took effect on January 1, 2023, enforcement began in February 2024 following a delay caused by legal challenges.

The CPRA does not fully replace the CCPA, but instead builds on it. Both laws remain in effect and work together to regulate data privacy in California. They are sometimes known as “the California GDPR.”

CCPA vs GDPR: who do the regulations apply to?

The GDPR and the CCPA/CPRA each specify which types of entities are subject to their rules, with notable differences in scope and applicability.

GDPR scope and application

The GDPR applies to any entity — whether a legal or natural person — that processes the personal data of individuals located within the EU/EEA, provided the processing is connected to either:

- offering them goods or services

- monitoring their behavior

Entities based outside the EU are included if they process the personal data of individuals located within the EU/EEA. The GDPR applies to EU organizations regardless of where the processing takes place.

Under the GDPR, entities are classified as either data controllers or data processors. Controllers determine the purposes and means of processing personal data, while processors act on behalf of the controller to process data.

The regulation does not apply to individuals collecting data for purely personal or household purposes. However, if an individual collects or processes personal data of EU residents for professional or commercial purposes, for example as a sole proprietor, they must comply with GDPR requirements.

The GDPR is not limited to businesses and applies to nonprofit organizations and government agencies as well.

CCPA/CPRA scope and application

Unlike the GDPR’s broad application, the CCPA/CPRA applies to for-profit businesses that do business in California and meet one of the following thresholds:

- have a gross annual revenue exceeding USD 26,625,000 in the previous calendar year

- buy, sell, or share the personal data of more than 100,000 consumers or households

- earn more than 50 percent of their revenue from the sale of consumers’ personal information
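The thresholds above amount to a simple either/or test. The following sketch encodes them as written in this article. The `BusinessProfile` fields and function name are hypothetical, the comparison operators mirror the article’s wording (“exceeding”, “more than”), and the result is only a first-pass screen, not a legal determination.

```python
from dataclasses import dataclass

# Figures as stated in the list above.
REVENUE_THRESHOLD_USD = 26_625_000
CONSUMER_THRESHOLD = 100_000
SALE_REVENUE_SHARE_THRESHOLD = 0.50


@dataclass
class BusinessProfile:
    for_profit: bool
    does_business_in_california: bool
    gross_annual_revenue_usd: int
    consumers_or_households: int          # whose personal data is bought, sold, or shared
    revenue_share_from_selling_pi: float  # fraction between 0.0 and 1.0


def meets_ccpa_thresholds(b: BusinessProfile) -> bool:
    """True if a for-profit business operating in California meets any one threshold."""
    if not (b.for_profit and b.does_business_in_california):
        return False
    return (
        b.gross_annual_revenue_usd > REVENUE_THRESHOLD_USD
        or b.consumers_or_households > CONSUMER_THRESHOLD
        or b.revenue_share_from_selling_pi > SALE_REVENUE_SHARE_THRESHOLD
    )
```

Because the thresholds are alternatives, a small business with modest revenue can still be covered if most of its revenue comes from selling personal information.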

The regulation defines such entities as “businesses” and extends compliance obligations to their service providers, third parties, and contractors through contractual agreements.

Like the GDPR, the CCPA/CPRA has extraterritorial reach. Businesses outside California — even those outside the US — must comply if they process the personal data of California residents and meet at least one of the regulation’s thresholds.

CCPA vs GDPR: who is protected?

The scope of protection under the CCPA/CPRA and the GDPR differs based on individuals’ residency status or location, which reflects the regulations’ distinct approaches to safeguarding individual rights.

Who is protected under the GDPR?

The GDPR protects the rights of any individual who is in the EU/EEA and whose data is processed. They are referred to as “data subjects” under the GDPR.

Who is protected under the CCPA/CPRA?

The CCPA/CPRA applies to individuals who meet California’s legal definition of a “resident.” A California resident is anyone who resides in the state other than for temporary reasons or anyone domiciled in California but who is currently outside the state for temporary reasons.

It does not include people who are in the state for temporary purposes. This definition may be clarified further as case law develops through rulings on alleged violations.

Individuals covered by the CCPA/CPRA are referred to as “consumers.”

CCPA vs GDPR: what data is protected?

Both the GDPR and the CCPA/CPRA regulate the collection and use of individuals’ personal data.

Personal data under the GDPR

The GDPR defines personal data as any information relating to “an identified or identifiable individual,” or data subject. This includes basic identifiers such as names, addresses, and phone numbers, as well as more indirect identifiers like IP addresses or location data that can be linked to an individual.

The GDPR also imposes stricter obligations on the processing of certain types of data known as “special categories of personal data,” which may reveal specific characteristics and pose greater risk of harm to an individual if misused or abused. The following are considered special categories of personal data under the regulation:

- data revealing racial or ethnic origin, political opinions, religious or philosophical beliefs, or trade union membership

- genetic data

- biometric data used for unique identification

- health information

- data related to a person’s sex life or sexual orientation

Personal information under the CCPA/CPRA

The CCPA/CPRA protects personal information, which it defines as “information that identifies, relates to, describes, is reasonably capable of being associated with, or could reasonably be linked, directly or indirectly, with a particular consumer or household.” Examples include names, email addresses, geolocation data, browsing history, and purchase records.

The CPRA introduced a new category called “sensitive personal information,” which has additional protections and obligations for businesses. It includes, among other things:

- Social Security numbers

- driver’s license numbers

- financial account information

- precise geolocation data used to accurately identify a person within a radius of 1,850 feet (about 564 meters)

- racial or ethnic origin

- religious beliefs

- genetic or biometric data

CCPA vs GDPR: when can data be processed?

The GDPR and the CCPA/CPRA take fundamentally different approaches to regulating when personal data can be processed.

Data processing under the GDPR

The GDPR requires data controllers to have a valid legal basis to process personal data. There are six legal bases under the regulation:

- Consent: When the data controller has obtained consent from the data subject that is “freely given, specific, informed, and unambiguous.” Consent must be voluntary and explicit to be considered valid.

- Contract: When processing is necessary to fulfill or prepare a contract with the data subject.

- Legal obligation: To comply with an obligation under a law laid down by the EU or the member state that applies to the data controllers.

- Vital interests: When processing is necessary in the vital interests of the data subject or of another person, such as in an emergency.

- Public interest: When processing is necessary for tasks carried out in the interest of the public, or for tasks carried out by the data controllers as an official authority, as determined by the EU or the member state that applies to the data controllers.

- Legitimate interests: When data processing is essential for the legitimate interests of the data controller or a third party, provided that those interests are not overridden by the rights and freedoms of the data subject.

These legal bases establish clear conditions under which organizations can collect, store, and use personal data, in an effort to ensure that processing aligns with lawful purposes. Companies may be required by data protection authorities to provide proof to back up their legal basis, e.g. if they claim legitimate interests instead of obtaining valid user consent.

Data processing under the CCPA/CPRA

The CCPA/CPRA does not require businesses to establish a legal basis for processing personal information. Instead, businesses are free to collect and process data under most circumstances, provided they comply with the law’s consumer-focused mechanisms. These include giving consumers the right to:

- opt out of the sale or sharing of their personal information

- opt out of use of their personal data for targeted advertising or profiling

- limit the use and disclosure of sensitive personal information

Businesses must also transparently disclose processing purposes and practices under the regulation.

Rather than restricting data processing upfront, the CCPA/CPRA places responsibility on businesses to provide clear mechanisms and processes for consumers to exercise their rights and control how their data is used.

CCPA vs GDPR: what does consent look like?

The CCPA/CPRA and the GDPR differ significantly in their approaches to consent. The GDPR relies on explicit opt-in consent, while the CCPA/CPRA generally uses an opt-out model, with exceptions for specific cases.

Consent under the GDPR

Consent is one of the legal bases for processing personal data under the GDPR. The regulation requires data controllers to obtain explicit consent from users before collecting or processing their data.

Consent given must be “freely given, specific, informed, and unambiguous.” This means individuals need to actively agree to their data being processed by taking an action such as ticking a box on a form or selecting specific settings.

Consent cannot be assumed from pre-checked boxes, ignoring the consent mechanism, or inactivity. Further, each purpose for processing data requires separate consent, and individuals must be able to withdraw their consent at any time. The process for withdrawing must be as simple and accessible as the process for giving consent.

The age of consent under the GDPR is 16 years. For minors under 16, the GDPR requires consent to be obtained from a parent or legal guardian. However, the GDPR permits member states to lower the age of consent to as young as 13 through their national laws.

Consent under the CCPA/CPRA

The CCPA/CPRA does not require businesses to obtain opt-in consent to collect or process personal information in most cases. Instead, it operates primarily on an opt-out model, where businesses must provide clear methods for consumers to decline the sale or sharing of their information.

However, there are specific scenarios in which prior consent is required under the CCPA/CPRA:

- Collecting, selling, or sharing the personal information of minors requires opt-in consent. For minors who are at least 13 but under 16, consent must be obtained directly from the minor. For those under 13, consent must come from a parent or legal guardian.

- Selling or sharing the personal information of consumers who have previously opted out requires a business to obtain the consumer’s consent.

- If a consumer dictates that a business only use sensitive personal information to provide the goods and services it offers, the business cannot use or disclose this information for any other reason without the consumer’s consent.

- Entering a consumer into financial incentive programs tied to the collection or retention of personal information requires explicit consent.

For cases requiring consent, the CCPA/CPRA’s definition of consent closely aligns with the GDPR’s requirements: it must be freely given, specific, informed, and unambiguous.
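The age-based rule for minors described above can be expressed as a small decision function. This is a sketch under the assumption that the middle band covers ages 13 through 15. The function name and return labels are hypothetical and only show how a consent flow might branch.

```python
def ccpa_consent_source(age: int) -> str:
    """Return who must opt in before a consumer's personal information is sold or shared."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 13:
        return "parent_or_guardian_opt_in"
    if age < 16:
        return "minor_opt_in"
    # Consumers aged 16 and over fall under the general opt-out model.
    return "opt_out_model"
```

A consent management flow could use the returned label to decide which consent prompt to show, or whether a “Do Not Sell Or Share My Personal Information” link is sufficient.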

CCPA vs GDPR: what are users’ rights?

Both the GDPR and the CCPA/CPRA grant individuals specific rights over their personal data, which enable them to understand, access, and control how their information is used.

Data subjects’ rights under the GDPR

Under the GDPR, data subjects are entitled to the following rights:

- Right to be informed: Individuals must be informed about how their data is collected, used, and shared, by whom, for what reason, and which third parties are receiving their data, if any.

- Right to access: Individuals can request confirmation of whether their data is being processed and obtain a copy of their data from the data controller.

- Right to rectification: Individuals can request corrections to incomplete or inaccurate personal data.

- Right to erasure (right to be forgotten): Individuals can ask for their personal data to be deleted under certain conditions, such as when it is no longer needed for its original purpose or when they withdraw consent, among others.

- Right to restrict processing: Individuals can request that their data isn’t processed in certain situations. These include instances when there is no legal basis for processing or the controller doesn’t require the data for the original purposes anymore, among others.

- Right to data portability: Individuals can receive the data collected on the basis of consent or contract in a structured, commonly used, and machine-readable format and transfer it to another controller.

- Right to object: Individuals can object to data processing on certain grounds, including when it is used for direct marketing purposes.

- Rights related to automated decision-making: Individuals can contest decisions made solely by automated processes that significantly affect them, such as profiling.

Consumers’ rights under the CCPA/CPRA

The CCPA/CPRA grants California residents the following rights over their personal information:

- Right to know and access: Consumers have a right to know what personal information is being collected about them, for what reason, and whether it is sold or shared. They also have a right to request a copy of their personal information collected by a business.

- Right to delete: Consumers can request that businesses delete their personal information, with some exceptions. For example, businesses do not have to delete data that is needed to comply with legal obligations.

- Right to correct: Consumers can request that inaccurate personal information be corrected.

- Right to opt out: Consumers can opt out of the sale or sharing of their personal information, as well as its use for targeted advertising or profiling. Businesses must include a “Do Not Sell Or Share My Personal Information” link on their websites.

- Right to limit: Consumers can limit the use or disclosure of their sensitive personal information for purposes other than obtaining the goods or services that the business provides.

- Right to nondiscrimination: Consumers are protected from being penalized or denied services for exercising their privacy rights under the regulation.

- Right to data portability: Consumers can request their personal information in a “structured, commonly used, machine‐readable format,” to transfer it to another service or business.

CCPA vs GDPR: transparency requirements

Both the GDPR and the CCPA/CPRA require businesses to provide transparency in their data handling practices, though they approach this requirement in different ways.

Transparency requirements under the GDPR

While the GDPR does not explicitly mandate publishing a privacy policy, it requires data controllers to provide detailed and specific information about their data processing policies in a way that is concise, transparent, and easy to understand. It must use clear and simple language, especially when communicating with children. This information should be easily accessible and provided in writing, electronically, or through other appropriate means. This requirement is typically achieved through a privacy policy published on a data controller’s website, often located in the footer so that it is easily accessible on every page.

A GDPR-compliant privacy policy must include:

- the data controller’s identity and contact information, and, if applicable, the contact details of the Data Protection Officer

- purpose(s) of and legal bases for data processing

- who will have access to the personal data

- categories of personal data being processed

- whether the data will be transferred internationally and the safeguards in place if so

- for how long the data will be retained

- information on data subjects’ rights and how to exercise them, as well as the right to lodge a complaint with a supervisory authority

- how to withdraw consent

Transparency requirements under the CCPA/CPRA

The CCPA/CPRA requires businesses to provide specific notices to consumers to ensure transparency about how their personal information is used.

Notice at or before the point of collection

Businesses are required to inform consumers about the personal information they collect at or before the time it is collected. This includes details on what types of information (including sensitive personal information, if any) are being collected, the purpose(s) of collection, how long they will keep the information, and whether it will be sold or shared. If the business sells personal information, the notice must include a “Do Not Sell Or Share My Personal Information” link so users can easily opt out. The notice should also provide a link to the business’s privacy policy, where consumers can find more detailed information about their rights and the company’s privacy practices.

Privacy policy

Businesses must have a privacy policy that is easy to access and includes:

- a list of the types of personal information the business collects, sells, or shares, which is updated at least once per year

- where the business collects the personal information from

- business or commercial purposes for collecting, selling, or sharing personal information

- who the business shares or discloses the information with

- what rights consumers have under the CCPA/CPRA and how they can exercise them

- a “Do Not Sell Or Share My Personal Information” link that takes consumers to a page where they can opt out of their information being sold or shared

- a “Limit The Use Of My Sensitive Personal Information” link (if applicable) so consumers can control how their sensitive information is used.

The privacy policy must be updated at least once every 12 months and whenever privacy practices change. It must be written in plain, simple language that the average person could understand, and it must be accessible to all readers, including those with disabilities.
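The 12-month update cycle is easy to lose track of, so a small date check can help. The sketch below is only an illustration: the function name `policy_update_overdue` is hypothetical, and it assumes "12 months" means the same calendar date one year later (with February 29 mapped to February 28).

```python
from datetime import date


def policy_update_overdue(last_updated: date, today: date) -> bool:
    """Return True if more than 12 months have passed since the last policy update."""
    try:
        due = last_updated.replace(year=last_updated.year + 1)
    except ValueError:
        # last_updated is February 29 and the following year is not a leap year.
        due = last_updated.replace(year=last_updated.year + 1, day=28)
    return today > due


# Hypothetical dates: a policy last updated on January 15, 2024, checked on March 1, 2025.
print(policy_update_overdue(date(2024, 1, 15), date(2025, 3, 1)))
```

A check like this only covers the annual cycle. Changes to privacy practices still require an update regardless of the date.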

CCPA vs GDPR: security requirements

Both the CCPA/CPRA and the GDPR require entities that process data to take steps to secure the personal information they collect, though their specific obligations differ.

Security requirements under the GDPR

Keeping personal data secure is a foundational principle of processing under the GDPR. The regulation requires that personal data is processed in a way that keeps it safe by protecting it against unauthorized or unlawful processing as well as accidental loss, destruction, or damage.

Controllers and processors are required to adopt technical and organizational security measures that are suitable to the risks posed to personal data. These measures may include pseudonymization, encryption, and robust access controls to prevent unauthorized processing.

Controllers are required to conduct Data Protection Impact Assessments (DPIAs) for processing activities likely to result in high risks to individuals’ rights and freedoms, such as profiling, large-scale processing, or handling sensitive data. These assessments identify potential risks and determine the safeguards needed to mitigate them.

Security requirements under the CCPA/CPRA

The CCPA as it was originally passed did not include specific security requirements. The CPRA’s amendments to the regulation introduced provisions to address data protection more directly.

The CCPA/CPRA now requires businesses that collect consumers’ personal information to implement reasonable security measures appropriate to the nature of the personal information. These measures aim to protect against unauthorized or illegal access, destruction, use, modification, or disclosure.

For data processing activities that pose significant risks to privacy or security, businesses must conduct regular risk assessments and annual cybersecurity audits. These reviews assess factors like how sensitive personal information is used and the possible effects on consumer rights, balanced against the purpose of the data processing. While the CPRA outlines these obligations, the exact requirements businesses must follow are still being defined.

CCPA vs GDPR: enforcement and penalties

Both the GDPR and the CCPA/CPRA include enforcement mechanisms and penalties to ensure compliance, but the process and scale differ significantly between the two laws.

Enforcement and penalties under the GDPR

Each EU member state enforces the GDPR through its own Data Protection Authority (DPA), an independent public body responsible for overseeing compliance. DPAs have the authority to investigate compliance, address complaints, and impose penalties for violations. Data subjects can lodge a complaint with the DPA in the country where they live, where they work, or where the alleged violation occurred.

GDPR penalties are among the highest globally for data protection violations. Fines are divided into two tiers based on the severity of the offense:

- for less severe violations, fines are up to 2 percent of annual global turnover or EUR 10 million, whichever is higher

- for more serious violations, fines can be up to 4 percent of annual global turnover or EUR 20 million, whichever is higher
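The “whichever is higher” rule in the two tiers above can be illustrated with a short calculation. This is a minimal sketch: the function name `gdpr_fine_cap`, the tier labels, and the turnover figures are hypothetical, while the percentages and fixed amounts are the ones listed above. It computes only the upper limit for a tier, not the fine a DPA would actually impose.

```python
from decimal import Decimal

# Tier parameters from the list above: (share of annual global turnover, fixed amount in EUR).
GDPR_TIERS = {
    "less_severe": (Decimal("0.02"), Decimal("10000000")),
    "more_serious": (Decimal("0.04"), Decimal("20000000")),
}


def gdpr_fine_cap(annual_global_turnover_eur: Decimal, tier: str) -> Decimal:
    """Return the maximum fine for a tier: the higher of the two limits."""
    share, fixed_amount = GDPR_TIERS[tier]
    return max(annual_global_turnover_eur * share, fixed_amount)


# Hypothetical company with EUR 100 million turnover: 2 percent is EUR 2 million,
# so the EUR 10 million fixed amount is the higher limit.
print(gdpr_fine_cap(Decimal("100000000"), "less_severe"))

# Hypothetical company with EUR 1 billion turnover: 4 percent is EUR 40 million,
# which is higher than the EUR 20 million fixed amount.
print(gdpr_fine_cap(Decimal("1000000000"), "more_serious"))
```

For smaller organizations the fixed amount sets the ceiling, while for large ones the percentage of turnover does.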

Enforcement and penalties under the CCPA/CPRA

The CCPA/CPRA is enforced by both the California Attorney General (AG) and the California Privacy Protection Agency (CPPA), a new enforcement body that was established under the CPRA. The CPPA has the authority to investigate violations and impose penalties, but it cannot limit the AG’s enforcement powers. The CPPA must halt its investigation if the AG requests, and businesses cannot be penalized by both authorities for the same violation.

Penalties under the CCPA/CPRA include:

- up to USD 2,663 for each unintentional violation

- up to USD 7,988 for each intentional violation

- up to USD 7,988 for each violation involving the personal information of minors

The regulation also provides consumers with the right to take legal action in the event of a data breach. Consumers can claim statutory damages of USD 107 to USD 799 per incident or seek actual damages, whichever is greater, along with injunctive relief. Private rights of action are limited to data breaches, while civil penalties apply only to violations pursued by the AG or CPPA.
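To get a feel for the scale of data breach claims, the statutory damages rule above can be sketched as a simple range calculation. The function name `breach_damages_range` is hypothetical, and the sketch assumes every incident counts once. What a court would actually award depends on the circumstances of the case.

```python
STATUTORY_MIN_USD = 107  # per incident, from the text above
STATUTORY_MAX_USD = 799  # per incident, from the text above


def breach_damages_range(incidents: int, actual_damages_usd: int = 0) -> tuple[int, int]:
    """Return (low, high): statutory damages or actual damages, whichever is greater."""
    if incidents < 0 or actual_damages_usd < 0:
        raise ValueError("incidents and actual_damages_usd must be non-negative")
    low = max(incidents * STATUTORY_MIN_USD, actual_damages_usd)
    high = max(incidents * STATUTORY_MAX_USD, actual_damages_usd)
    return low, high


# Hypothetical breach involving 1,000 incidents and no proven actual damages.
low, high = breach_damages_range(1000)
print(f"USD {low:,} to USD {high:,}")
```

Even at the lower bound, the per-incident amounts add up quickly once a breach involves thousands of consumers.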

CCPA vs GDPR: how to comply

The first step toward compliance is determining whether your organization collects personal data or personal information from individuals protected under these laws. For California residents, businesses must also confirm whether they meet the legal definition of a “business” under the CCPA/CPRA.

We strongly recommend consulting a qualified legal expert who can give you advice specific to your organization to achieve compliance with both data privacy regulations.

GDPR compliance

Here is a non-exhaustive list of steps to take for GDPR compliance:

- create a privacy policy that clearly outlines data collection, processing, and storage practices

- obtain specific, informed, and freely given consent from data subjects before collecting or processing personal data

- maintain detailed records of all data processing activities, including the purposes, data categories, and data retention periods

- enter into Data Processing Agreements (DPAs) with data processors, setting out clear terms for processing activities and mandating compliance with GDPR standards

A consent management platform (CMP) can simplify compliance with the GDPR’s consent and record-keeping requirements. CMPs enable businesses to collect and document explicit user consent for data processing, including cookies, in a manner that aligns with GDPR standards. They can also help maintain records of consent, link these to processing activities, and integrate cookie banners to promote transparency.

CCPA/CPRA compliance

To comply with the CCPA/CPRA, businesses should focus on the following key actions:

- provide a notice at or before the point of data collection that details what personal and sensitive information is collected, how it will be used, and whether it will be sold or shared

- ensure your privacy policy includes information about what data categories are collected and for what purpose, as well as instructions on how consumers can exercise their rights

- add visible opt-out links to your website — labelled “Do Not Sell Or Share My Personal Information” and “Limit The Use Of My Sensitive Personal Information” — to enable consumers to exercise their opt-out rights

- provide at least two ways for consumers to exercise their rights, such as a toll-free phone number or a web form

- secure opt-in consent for selling or sharing data for individuals between the ages of 13 and 16, and obtain consent from a parent or guardian for minors under 13

- ensure your organization does not penalize or discriminate against consumers who exercise their privacy rights
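The age-based consent rule in the list above can be expressed as a small lookup. This is a sketch under assumptions: the function name and the returned labels are hypothetical, and it treats 16 as the first age at which the default opt-out model applies, which is how the age range is commonly read.

```python
def sale_or_share_consent_rule(age: int) -> str:
    """Return which consent model applies to selling or sharing a consumer's data."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 13:
        # Minors under 13: consent must come from a parent or guardian.
        return "parental_opt_in"
    if age < 16:
        # Ages 13 to 15: the minor must opt in directly.
        return "consumer_opt_in"
    # Ages 16 and over (assumed boundary): data may be sold or shared unless the consumer opts out.
    return "opt_out"


for age in (10, 14, 30):
    print(age, sale_or_share_consent_rule(age))
```

In practice, a rule like this only works if the business has a reliable way of knowing a consumer’s age in the first place.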

A CMP can enable businesses to implement opt-out mechanisms for the sale or sharing of personal information and manage limitations on sensitive personal information. CMPs also make it easier to display a notice at the point of collection through a cookie banner to inform consumers about data collection practices.

Usercentrics does not provide legal advice, and information is provided for educational purposes only. We always recommend engaging qualified legal counsel or privacy specialists regarding data privacy and protection issues and operations.

The California Consumer Privacy Act (CCPA) and the California Privacy Rights Act (CPRA) are consumer privacy laws that aim to safeguard California residents’ personal information. Businesses that operate in California must understand these regulations to protect consumer privacy, maintain trust, and avoid potential litigation and penalties.

In this guide, we look at the California privacy laws, the changes introduced by the CPRA, and how businesses can achieve compliance.

What is the CCPA and CPRA?

The California Consumer Privacy Act (CCPA) was passed in 2018 and has been in effect since January 1, 2020. It’s the first comprehensive consumer privacy law passed in the US. It grants California’s nearly 40 million residents greater control over their personal information and imposes obligations on businesses that handle this information.

The California Privacy Rights Act (CPRA), approved by ballot on November 3, 2020, does not entirely replace the CCPA. Instead, the CPRA strengthens and expands the CCPA with enhanced protections for the state’s residents, known as “consumers” under the laws, and new obligations for businesses. The CPRA went into effect on January 1, 2023, but legal challenges delayed enforcement until February 2024.

The CPRA brings the California privacy law closer to the European Union’s General Data Protection Regulation (GDPR) in some ways. Together, the two California privacy laws are often referred to as “the CCPA, as amended by the CPRA” or simply the “CCPA/CPRA.”

Understanding the CCPA

The CCPA set a new standard for consumer data privacy in the US, empowering California residents with control over their personal information and requiring businesses to comply with strict data handling practices.

Who must comply with the CCPA?

For-profit businesses operating in California must comply with the CCPA if they:

- collect or process the personal information of California residents

and

- meet at least one of the following thresholds:

  - have annual gross revenues exceeding USD 26,625,000

  - handle personal information of 50,000 or more consumers, households, or devices

  - earn more than 50 percent of their annual revenue from selling consumers’ personal information
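The applicability test above combines two required conditions with an “at least one of” set of thresholds. A rough screening sketch of that logic follows. The class and field names are hypothetical, the threshold values are the ones listed above, and the result is no substitute for a legal assessment.

```python
from dataclasses import dataclass

# Thresholds as listed above.
REVENUE_THRESHOLD_USD = 26_625_000
RECORDS_THRESHOLD = 50_000
SALES_REVENUE_SHARE_THRESHOLD = 0.5


@dataclass
class BusinessProfile:
    for_profit: bool
    handles_california_personal_info: bool
    annual_gross_revenue_usd: float
    consumers_households_or_devices: int
    revenue_share_from_selling_pi: float  # fraction between 0.0 and 1.0


def ccpa_applies(business: BusinessProfile) -> bool:
    """Screen a business against the criteria listed above."""
    # Both base conditions must hold before the thresholds matter.
    if not (business.for_profit and business.handles_california_personal_info):
        return False
    # Meeting any single threshold is enough.
    return (
        business.annual_gross_revenue_usd > REVENUE_THRESHOLD_USD
        or business.consumers_households_or_devices >= RECORDS_THRESHOLD
        or business.revenue_share_from_selling_pi > SALES_REVENUE_SHARE_THRESHOLD
    )


# Hypothetical small retailer: low revenue, but personal information from 60,000 consumers.
retailer = BusinessProfile(True, True, 3_000_000, 60_000, 0.0)
print(ccpa_applies(retailer))
```

Note that the business’s location is not part of the check, which reflects the law’s extraterritorial reach.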

Importantly, the law has extraterritorial jurisdiction, meaning it applies to businesses outside California if they meet these criteria. The CPRA changed some of these criteria, as outlined below.

Who does the CCPA protect?

The CCPA protects individuals who meet the following legal definition of a California resident:

- those who are in the state for purposes other than a temporary or transitory reason

- those who are domiciled in California while temporarily outside the state, such as for vacation or work

Individuals who meet this legal definition remain protected even when they are temporarily outside the state. However, individuals who are only temporarily in California, e.g. for vacation, are not protected under the law.

The definition of who qualifies as a California resident may shift as courts interpret the CCPA in response to legal challenges and privacy lawsuits.

What does the CCPA protect?