The United States does not have a comprehensive federal data privacy law that governs how businesses access or use individuals’ personal information. Instead, privacy protections and regulation are currently left to individual states. California led the way in 2020 with the California Consumer Privacy Act (CCPA), later strengthened by the California Privacy Rights Act (CPRA). As of January 2025, 20 states have passed similar laws. The variances in consumers’ rights, companies’ responsibilities, and other factors make compliance challenging for businesses operating in multiple states.

The American Data Privacy and Protection Act (ADPPA) sought to simplify privacy compliance by establishing a comprehensive federal privacy standard. The ADPPA emerged in June 2022 when Representative Frank Pallone introduced HR 8152 to the House of Representatives. The bill gained strong bipartisan support in the House Energy and Commerce Committee, passing with a 53-2 vote in July 2022. It also received amendments in December 2022. However, the bill did not progress any further.

As proposed, the ADPPA would have preempted most state-level privacy laws, replacing the current multi-state compliance burden with a single federal standard.

In this article, we’ll examine who the ADPPA would have applied to, its obligations for businesses, and the rights it would have granted US residents.

What is the American Data Privacy and Protection Act (ADPPA)?

The American Data Privacy and Protection Act (ADPPA) was a proposed federal bill that would have set consistent rules for how organizations handle personal data across the United States. It aimed to protect individuals’ privacy with comprehensive safeguards while requiring organizations to meet strict standards for handling personal data.

Under the ADPPA, an individual is defined as “a natural person residing in the United States.” Organizations that collect, use, or share individuals’ personal data would have been responsible for protecting it, including measures to prevent unauthorized access or misuse. By balancing individual rights and business responsibilities, the ADPPA sought to create a clear and enforceable framework for privacy nationwide.

What data would have been protected under the American Data Privacy and Protection Act (ADPPA)?

The ADPPA aimed to protect the personal information of US residents, which it refers to as covered data. Covered data is broadly defined as “information that identifies or is linked, or reasonably linkable, alone or in combination with other information, to an individual or a device that identifies or is linked or reasonably linkable to an individual.” In other words, covered data is any data that identifies, or could be traced to, a person or a device linked to an individual. This includes data derived from other information as well as unique persistent identifiers, such as those used to track devices or users across platforms.

The definition excludes:

- Deidentified data

- Employee data

- Publicly available information

- Inferences made exclusively from multiple separate sources of publicly available information, so long as they don’t reveal private or sensitive details about a specific person

Sensitive covered data under the ADPPA

The ADPPA, like other data protection regulations, would have required stronger safeguards for sensitive covered data that could harm individuals if it was misused or unlawfully accessed. The bill’s definition of sensitive covered data is extensive, going beyond many US state-level data privacy laws.

Protected categories of data include, among other things:

- Personal identifiers, including government-issued IDs like Social Security numbers and driver’s licenses, except when legally required for public display.

- Health information, including details about past, present, or future physical and mental health conditions, treatments, disabilities, and diagnoses.

- Financial data, such as account numbers, debit and credit card numbers, income, and balance information. The last four digits of payment cards are excluded.

- Private communications, such as emails, texts, calls, direct messages, voicemails, and their metadata. This does not apply if the device is employer-provided and individuals are given clear notice of monitoring.

- Behavioral data, including sexual behavior information when collected against reasonable expectations, video content selections, and online activity tracking across websites.

- Personal records, such as private calendars, address books, photos, and recordings, except on employer-provided devices with notice.

- Demographic details, including race, color, ethnicity, religion, and union membership.

- Other protected categories, including biometric information, genetic information, precise location data, and information about minors.

- Security credentials, login details or security or access codes for an account or device.

Who would the American Data Privacy and Protection Act (ADPPA) have applied to?

The ADPPA would have applied to a broad range of entities that handle covered data.

Covered entity under the ADPPA

A covered entity is “any entity or any person, other than an individual acting in a non-commercial context, that alone or jointly with others determines the purposes and means of collecting, processing, or transferring covered data.” This definition matches similar terms like “controller” in US state privacy laws and the European Union’s General Data Protection Regulation (GDPR). To qualify as a covered entity under the ADPPA, the organization would have had to be in one of three categories:

- Businesses regulated by the Federal Trade Commission Act (FTC Act)

- Telecommunications carriers

- Nonprofits

Although the bill did not explicitly address international jurisdiction, its reach could have extended beyond US borders. Foreign companies would have needed to comply if they handle US residents’ data for commercial purposes and meet the FTC Act’s jurisdictional requirements, such as conducting business activities in the US or causing foreseeable injury within the US. This type of extraterritorial scope is common among a number of other international data privacy laws.

Service provider under the ADPPA

A service provider was defined as a person or entity that engages in either of the following:

- Collects, processes, or transfers covered data on behalf of a covered entity or government body

OR

- Receives covered data from or on behalf of a covered entity or government body

This role mirrors what other data protection laws call a processor, including most state privacy laws and the GDPR.

Large data holders under the ADPPA

Large data holders were not considered a third type of organization. Both covered entities and service providers could have qualified as large data holders if, in the most recent calendar year, they had gross annual revenues of USD 250 million or more, and collected, processed, or transferred:

- Covered data of more than 5,000,000 individuals or devices, excluding data used solely for payment processing

- Sensitive covered data from more than 200,000 individuals or devices

Large data holders would have faced additional requirements under the ADPPA.

Third-party collecting entity under the ADPPA

The ADPPA introduced the concept of a third-party collecting entity, which refers to a covered entity that primarily earns its revenue by processing or transferring personal data it did not collect directly from the individuals to whom the data relates. In other contexts, they are often referred to as data brokers.

However, the definition excluded certain activities and entities:

- A business would not be considered a third-party collecting entity if it processed employee data received from another company, but only for the purpose of providing benefits to those employees

- A service provider would also not be classified as a third-party collecting entity under this definition

An entity is considered to derive its principal source of revenue from data processing or transfer if, in the previous 12 months, either:

- More than 50 percent of its total revenue came from these activities

or

- The entity processed or transferred the data of more than 5 million individuals that it did not collect directly

Third-party collecting entities that process data from more than 5,000 individuals or devices in a calendar year would have had to register with the Federal Trade Commission by January 31 of the following year. Registration would require a fee of USD 100 and basic information about the organization, including its name, contact details, the types of data it handles, and a link to a website where individuals can exercise their privacy rights.
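The revenue test and registration threshold described above can be sketched in code. The Python below is a minimal illustration under stated assumptions, not a legal test: the `EntityProfile` class, its field names, and all function names are hypothetical, the numbers simply restate the figures in this article, and the employee-benefits exclusion is not modeled.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Figures as summarized in this article (not taken directly from the bill text).
REVENUE_SHARE_THRESHOLD = 0.50              # "more than 50 percent" of total revenue
INDIRECT_INDIVIDUALS_THRESHOLD = 5_000_000  # individuals whose data was not collected directly
REGISTRATION_THRESHOLD = 5_000              # individuals or devices per calendar year
REGISTRATION_FEE_USD = 100


@dataclass
class EntityProfile:
    """Hypothetical 12-month summary of a covered entity."""
    total_revenue_usd: float
    indirect_data_revenue_usd: float  # revenue from processing or transferring indirectly collected data
    indirect_individuals: int         # individuals whose data was not collected directly
    is_service_provider: bool = False


def derives_principal_revenue(p: EntityProfile) -> bool:
    """Either-or test: revenue share above 50 percent, or more than 5 million individuals."""
    share = p.indirect_data_revenue_usd / p.total_revenue_usd if p.total_revenue_usd > 0 else 0.0
    return share > REVENUE_SHARE_THRESHOLD or p.indirect_individuals > INDIRECT_INDIVIDUALS_THRESHOLD


def is_third_party_collecting_entity(p: EntityProfile) -> bool:
    """Service providers are excluded from the definition."""
    return not p.is_service_provider and derives_principal_revenue(p)


def registration_deadline(p: EntityProfile, calendar_year: int, processed_in_year: int) -> Optional[date]:
    """Return January 31 of the following year if registration would be required, else None."""
    if is_third_party_collecting_entity(p) and processed_in_year > REGISTRATION_THRESHOLD:
        return date(calendar_year + 1, 1, 31)
    return None
```

For example, a hypothetical broker earning 60 percent of its revenue from indirectly collected data and processing data from 20,000 individuals in 2024 would get a deadline of January 31, 2025, while the same broker processing data from only 4,000 individuals would get `None`.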

Exemptions under the ADPPA

While the ADPPA potentially would have had a wide reach, certain exemptions would have applied.

- Small businesses: Organizations with less than USD 41 million in annual revenue or those that process data for fewer than 50,000 individuals would be exempt from some provisions.

- Government entities: The ADPPA would not apply to government bodies or their service providers handling covered data. It also excluded congressionally designated nonprofits that support victims and families with issues involving missing and exploited children.

- Organizations subject to other federal laws: Organizations already complying with certain existing privacy laws, including the Health Insurance Portability and Accountability Act (HIPAA), the Gramm-Leach-Bliley Act (GLBA), and the Family Educational Rights and Privacy Act (FERPA), among others, were deemed compliant with similar ADPPA requirements for the specific data covered by those laws. However, they would have still been required to comply with Section 208 of the ADPPA, which contains provisions for data security and protection of covered data.

Definitions in the American Data Privacy and Protection Act (ADPPA)

Like other data protection laws, the ADPPA defined several terms that are important for businesses to know. While many — like “collect” or “process” — can be found in other regulations, there are also some that are unique to the ADPPA. We look at some of these key terms below.

Knowledge under the ADPPA

“Knowledge” refers to whether a business is aware that an individual is a minor. The level of awareness required depends on the type and size of the business.

- High-impact social media companies: These are large platforms that are primarily known for user-generated content. They would have to have at least USD 3 billion in annual revenue and 300 million monthly active users over 3 months in the preceding year. They would be considered to have knowledge if they were aware or should have been aware that a user was a minor. This is the strictest standard.

- Large data holders: These are organizations that have significant data operations but do not qualify as high-impact social media. They have knowledge if they knew or willfully ignored evidence that a user was a minor.

- Other covered entities or service providers: Those that do not fall into the above categories are required to have actual knowledge that the user is a minor.
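The three tiers above amount to a simple lookup from entity type to knowledge standard. The following Python is a rough sketch of that mapping only; the enum, the function name, and the boolean flags are hypothetical, and deciding whether an entity actually qualifies as a high-impact social media company or a large data holder is assumed to happen elsewhere.

```python
from enum import Enum


class KnowledgeStandard(Enum):
    """Labels paraphrase this article's summary of each tier."""
    KNEW_OR_SHOULD_HAVE_KNOWN = "knew or should have known"
    KNEW_OR_WILLFULLY_IGNORED = "knew or willfully ignored evidence"
    ACTUAL_KNOWLEDGE = "actual knowledge"


def knowledge_standard(is_high_impact_social_media: bool, is_large_data_holder: bool) -> KnowledgeStandard:
    """Pick the standard for a given entity, strictest tier first."""
    if is_high_impact_social_media:
        return KnowledgeStandard.KNEW_OR_SHOULD_HAVE_KNOWN
    if is_large_data_holder:
        return KnowledgeStandard.KNEW_OR_WILLFULLY_IGNORED
    return KnowledgeStandard.ACTUAL_KNOWLEDGE
```

Checking the high-impact tier first reflects the article's description of large data holders as organizations that "do not qualify as high-impact social media."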

Some states — like Minnesota and Nebraska — define “known child” but do not adjust the criteria for what counts as knowledge based on the size or revenue of the business handling the data. Instead, they apply the same standard to all companies, regardless of their scale.

Affirmative express consent under the ADPPA

The ADPPA uses the term “affirmative express consent,” which refers to “an affirmative act by an individual that clearly communicates the individual’s freely given, specific, and unambiguous authorization” for a business to perform an action, such as collecting or using their personal data. Consent for data collection would have to be obtained after the covered entity provides clear information about how it will use the data.

Like the GDPR and other data privacy regulations, consent would have needed to be freely given, informed, specific, and unambiguous.

Under this definition, consent cannot be inferred from an individual’s inaction or continued use of a product or service. Additionally, covered entities cannot trick people into giving consent through misleading statements or manipulative design. This includes deceptive interfaces meant to confuse users or limit their choices.
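One way to picture these conditions is as a checklist applied to each recorded consent event. The Python below is an illustrative sketch, assuming a hypothetical `ConsentSignal` record whose fields are invented for this example; it restates the criteria above and is not a compliance tool.

```python
from dataclasses import dataclass


@dataclass
class ConsentSignal:
    """Hypothetical record of how a consent decision was captured."""
    affirmative_act: bool              # the individual took a clear, deliberate action
    disclosure_shown_first: bool       # clear information on data use was provided beforehand
    inferred_from_inaction: bool       # e.g. the individual simply did nothing
    inferred_from_continued_use: bool  # e.g. the individual kept using the service
    manipulative_design_used: bool     # misleading statements or deceptive interfaces


def is_affirmative_express_consent(s: ConsentSignal) -> bool:
    """True only if every condition described in this section is satisfied."""
    return (
        s.affirmative_act
        and s.disclosure_shown_first
        and not s.inferred_from_inaction
        and not s.inferred_from_continued_use
        and not s.manipulative_design_used
    )
```

A signal that records an affirmative act after a clear disclosure passes the check; flipping any of the three "inferred" or "manipulative" flags to `True` makes it fail.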

Transfer under the ADPPA

Most data protection regulations include a definition for the sale of personal data or personal information. While the ADPPA did not define sale, it instead defined “transfer” as “to disclose, release, disseminate, make available, license, rent, or share covered data orally, in writing, electronically, or by any other means.”

What are consumers’ rights under the American Data Privacy and Protection Act (ADPPA)?

Under the ADPPA, consumers would have had the following rights regarding their personal data.

- Right of awareness: The Commission must publish and maintain a webpage describing the provisions, rights, obligations, and requirements of the ADPPA for individuals, covered entities, and service providers. This information must be:

  - Published within 90 days of the law’s enactment

  - Updated quarterly as needed

  - Available in the ten most commonly used languages in the US

- Right to transparency: Covered entities must provide clear information about how consumer data is collected, used, and shared. This includes which third parties would receive their data and for what purposes.

- Right of access: Consumers can access their covered data (including data collected, processed, or transferred within the past 24 months), categories of third parties and service providers who received the data, and the purpose(s) for transferring the data.

- Right to correction: Consumers can correct any substantial inaccuracies or incomplete information in their covered data and instruct the covered entity to notify all third parties or service providers that have received the data.

- Right to deletion: Consumers can request that their covered data processed by the covered entity be deleted. They can also instruct the covered entity to notify all third parties or service providers that have received the data of the deletion request.

- Right to data portability: Consumers can request their personal data in a structured, machine-readable format that enables them to transfer it to another service or organization.

- Right to opt out: Consumers can opt out of the transfer of their personal data to third parties and its use for targeted advertising. Businesses are required to provide a clear and accessible mechanism for exercise of this right.

- Private right of action: Consumers can sue companies directly for certain violations of the act, with some limitations and procedural requirements. (California is the only state to provide this right as of early 2025.)

What are privacy requirements under the American Data Privacy and Protection Act (ADPPA)?

The ADPPA would have required organizations to meet certain obligations when handling individuals’ covered data. Here are the key privacy requirements under the bill.

Consent

Organizations must obtain clear, explicit consent through easily understood standalone disclosures. Consent requests must be accessible, available in all service languages, and give equal prominence to accept and decline options. Organizations must provide mechanisms to withdraw consent that are as simple as giving it.

Organizations must avoid using misleading statements or manipulative designs, and must obtain new consent for different data uses or significant privacy policy changes. While the ADPPA works alongside the Children’s Online Privacy Protection Act (COPPA)’s parental consent requirements for children under 13, it adds its own protections for minors up to age 17.

Privacy policy

Organizations must maintain clear, accessible privacy policies that detail their data collection practices, transfer arrangements, retention periods, and rights granted to individuals. These policies must specify whether data goes to countries like China, Russia, Iran, or North Korea, which could present a security risk, and they must be available in all languages where services are offered. When making material changes, organizations must notify affected individuals in advance and give them a chance to opt out.

Data minimization

Organizations can only collect and process data that is reasonably necessary to provide requested services or for specific allowed purposes. These allowed purposes include, among others, completing transactions, maintaining services, protecting against security threats, meeting legal obligations, and preventing harm where there is a risk of death. Collected data must also be proportionate to these activities.

Privacy by design

Privacy by design is a default requirement under the ADPPA. Organizations must implement reasonable privacy practices that consider the organization’s size, data sensitivity, available technology, and implementation costs. They must align with federal laws and regulations and regularly assess risks in their products and services, paying special attention to protecting minors’ privacy and implementing appropriate safeguards.

Data security

Organizations must establish, implement, and maintain appropriate security measures, including vulnerability assessments, preventive actions, employee training, and incident response plans. They must implement clear data disposal procedures and match their security measures to their data handling practices.

Privacy and data security officers

Organizations with more than 15 employees must appoint both a privacy officer and data security officer, who must be two distinct individuals. These officers are responsible for implementing privacy programs and maintaining ongoing ADPPA compliance.

Privacy impact assessments

Organizations — excluding large data holders and small businesses — must conduct regular privacy assessments that evaluate the benefits and risks of their data practices. These assessments must be documented and maintained, and consider factors like data sensitivity and potential privacy impacts.

Loyalty with respect to pricing

Organizations cannot discriminate against individuals who exercise their privacy rights. While they can adjust prices based on necessary financial information and offer voluntary loyalty programs, they cannot retaliate through changes in pricing or service quality, for example, when an individual requests their data or declines to consent to certain data processing.

Special requirements for large data holders

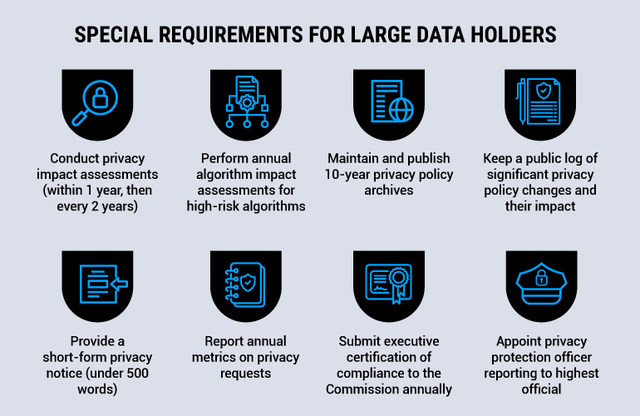

In addition to their general obligations, large data holders would have had unique responsibilities under the proposed law.

Privacy policy

Large data holders would have been required to maintain and publish 10-year archives of their privacy policies on their websites. They would need to keep a public log documenting significant privacy policy changes and their impact. Additionally, they would need to provide a short-form notice (under 500 words) highlighting unexpected practices and sensitive data handling.

Privacy and data security officers

At least one of the appointed officers would have been designated as a privacy protection officer who reports directly to the highest official at the organization. This officer, either directly or through supervised designees, would have been required to do the following:

- Establish processes to review and update privacy and security policies, practices, and procedures

- Conduct biennial comprehensive audits to ensure compliance with the proposed law and make them accessible to the Commission upon request

- Develop employee training programs about ADPPA compliance

- Maintain detailed records of all material privacy and security practices

- Serve as the point of contact for enforcement authorities

Privacy impact assessments

While all organizations other than small businesses would be required to conduct privacy impact assessments under the proposed law, large data holders would have had additional requirements.

- Timing: While other organizations must conduct assessments within one year of the ADPPA’s enactment, large data holders would have been required to do so within one year of either becoming a large data holder or the law’s enactment, whichever came first.

- Scope: Both must consider nature and volume of data and privacy risks, but large data holders would need to specifically assess “potential adverse consequences” in addition to “substantial privacy risks.”

- Approval: Large data holders’ assessments would need to be approved by their privacy protection officer, while other entities would have no specific approval requirement.

- Technology review: Large data holders would need to include reviews of security technologies (like blockchain and distributed ledger); this review would be optional for other entities.

- Documentation: While both would need to maintain written assessments until the next assessment, large data holders’ assessments would also need to be accessible to their privacy protection officer.

Metrics reporting

Large data holders would be required to compile and disclose annual metrics related to verified access, deletion, and opt-out requests. These metrics would need to be included in their privacy policy or published on their website.
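As a rough illustration of what compiling such metrics could involve, the Python sketch below tallies verified requests by type for a given year. The request log format (a list of dictionaries with `type`, `year`, and `verified` keys) and the function name are assumptions made for this example; the bill as summarized here does not prescribe a data format.

```python
from collections import Counter

# Request types named in this section.
REPORTED_TYPES = ("access", "deletion", "opt_out")


def annual_request_metrics(requests, year):
    """Count verified access, deletion, and opt-out requests received in `year`."""
    counts = Counter(
        r["type"]
        for r in requests
        if r["year"] == year and r["verified"] and r["type"] in REPORTED_TYPES
    )
    # Always report every type, even when the count is zero.
    return {t: counts.get(t, 0) for t in REPORTED_TYPES}
```

Unverified requests and requests from other years are left out of the totals, matching the "verified" qualifier above.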

Executive certification

An executive officer would have been required to annually certify to the FTC that the large data holder has internal controls and a reporting structure in place to achieve compliance with the proposed law.

Algorithm impact assessments

Large data holders using covered algorithms that could pose a consequential risk of harm would be required to conduct an annual impact assessment of these algorithms. This requirement would be in addition to privacy impact assessments and would need to begin no later than two years after the Act’s enactment.

American Data Privacy and Protection Act (ADPPA) enforcement and penalties for noncompliance

The ADPPA would have established a multi-layered enforcement approach that set it apart from other US privacy laws.

- Federal Trade Commission: The FTC would serve as the primary enforcer, treating violations as unfair or deceptive practices under the Federal Trade Commission Act. The proposed law required the FTC to create a dedicated Bureau of Privacy for enforcement.

- State Attorneys General: State Attorneys General and State Privacy Authorities could bring civil actions on behalf of their residents if they believed violations had affected their state’s interest.

- California Privacy Protection Authority (CPPA): The CPPA, established under the California Privacy Rights Act, would have special enforcement authority. The CPPA could enforce the ADPPA in California in the same manner as it enforces California’s privacy laws.

Starting two years after the law would have taken effect, individuals would gain a private right of action, or the right to sue for violations. However, before filing a lawsuit, they would need to notify both the Commission and their state Attorney General.

The ADPPA itself did not establish specific penalties for violations. Instead, violations of the ADPPA or its regulations would be treated as violations of the Federal Trade Commission Act, subject to the same penalties, privileges, and immunities provided under that law.

The American Data Privacy and Protection Act (ADPPA) compared to other data privacy regulations

As privacy regulations continue to evolve worldwide, it’s helpful to understand how the ADPPA would compare with other comprehensive data privacy laws.

The EU’s GDPR has set the global standard for data protection since 2018. In the US, the CCPA (as amended by the CPRA) established the first comprehensive state-level privacy law and has influenced subsequent state legislation. Below, we’ll look at how the ADPPA compares with these regulations.

The ADPPA vs the GDPR

There are many similarities between the proposed US federal privacy law and the EU’s data protection regulation. Both require organizations to implement privacy and security measures, provide individuals with rights over their personal data (including access, deletion, and correction), and mandate clear privacy policies that detail their data processing activities. Both also emphasize data minimization principles and purpose limitation.

However, there are also several important differences between the two.

| Aspect | ADPPA | GDPR |
|---|---|---|
| Territorial scope | Would have applied to individuals residing in the US. | Applies to EU residents and any organization processing their data, regardless of location. |
| Consent | Not a standalone legal basis; required only for specific activities like targeted advertising and processing sensitive data. | One of six legal bases for processing; can be a primary justification. |
| Government entities | Excluded federal, state, tribal, territorial and local government entities. | Applies to public bodies and authorities. |
| Privacy officers | Required “privacy and security officers” for covered entities with more than 15 employees, with stricter rules for large data holders. | Requires a Data Protection Officer (DPO) for public authorities or entities engaged in large-scale data processing. |
| Data transfers | No adequacy requirements; focus on transfers to specific countries (China, Russia, Iran, North Korea). | Detailed adequacy requirements and transfer mechanisms. |
| Children’s data | Extended protections to minors up to age 17. | Focuses on children under 16 (can be lowered to 13 by member states). |
| Penalties | Violations would have been treated as violations of the Federal Trade Commission Act. | Imposes fines up to 4% of annual global turnover or €20 million, whichever is higher. |

The ADPPA vs the CCPA/CPRA

There are many similarities between the proposed US federal privacy law and California’s existing privacy framework. Both include comprehensive transparency requirements, including privacy notices in multiple languages and accessibility for people with disabilities. They also share similar approaches to prohibiting manipulative design practices and requirements for regular security and privacy assessments.

However, there are also differences between the ADPPA and CCPA/CPRA.

| Aspect | ADPPA | CCPA/CPRA |
|---|---|---|
| Covered entities | Would have applied to organizations under jurisdiction of the Federal Trade Commission, including nonprofits and common carriers; excluded government agencies. | Applies only to for-profit businesses meeting any of these specific thresholds: (1) gross annual revenue of over USD 26,625,000; (2) receive, buy, sell, or share personal information of 100,000 or more consumers or households; (3) earn more than half of their annual revenue from the sale of consumers’ personal information. |
| Private right of action | Broader right to sue for various violations. | Limited to data breaches only. |
| Data minimization | Required data collection and processing to be limited to what is reasonably necessary and proportionate. | Similar requirement, but the CPRA allows broader processing for “compatible” purposes. |
| Algorithmic impact assessments | Required large data holders to conduct annual assessments focusing on algorithmic risks, bias, and discrimination. | Requires risk assessments weighing benefits and risks of data practices, with no explicit focus on bias. |
| Executive accountability | Required executive certification of compliance. | No executive certification requirement. |
| Enforcement | Would have been enforced by the Federal Trade Commission, state attorneys general, and the California Privacy Protection Authority (CPPA). | CPPA and local authorities within California. |

Consent management and the American Data Privacy and Protection Act (ADPPA)

The ADPPA would have required organizations to obtain affirmative express consent for certain data processing activities through clear, conspicuous standalone disclosures. These consent requests would need to be easily understood, equally prominent for either accepting or declining, and available in all languages where services are offered. Organizations would also need to provide simple mechanisms for withdrawing consent that would be as easy to use as giving consent was initially. The bill also required organizations to honor opt-out requests for practices like targeted advertising and certain data transfers. These opt-out mechanisms would need to be accessible and easy to use, with clear instructions for exercising these rights.

Organizations would need to clearly disclose not only the types of data they collect but also the parties with whom this information is shared. Consumers would also need to be informed about their data rights and how to act on them, such as opting out of processing, through straightforward explanations and guidance.

To support transparency, organizations would also be required to maintain privacy pages that are regularly updated to reflect their data collection, use, and sharing practices. These pages would help provide consumers with access to the latest information about how their data is handled. Additionally, organizations would have been able to use banners or buttons on websites and apps to inform consumers about data collection and provide them with an option to opt out.

Though the ADPPA was not enacted, the US does have an increasing number of state-level data privacy laws. A consent management platform (CMP) like the Usercentrics CMP for website consent management or app consent management can help organizations streamline compliance with the many existing privacy laws in the US and beyond. The CMP securely maintains records of consent, automates opt-out processes, and enables consistent application of privacy preferences across an organization’s digital properties. It also helps to automate the detection and blocking of cookies and other tracking technologies that are in use on websites and apps.


How far can companies go to get a user’s consent? When does inconvenience or questionable user experience tip over into legally noncompliant manipulation? These continue to be important questions across the data privacy landscape, especially with mobile apps, an area where regulatory scrutiny and enforcement have been ramping up.

French social networking app BeReal requests users’ consent to use their data for targeted advertising, which is very common. However, how they go about presenting (and re-presenting) that request has led to a complaint against them relating to their GDPR compliance. Let’s look at what BeReal is doing to get user consent, what the complaint is, and the legal basis for it.

BeReal’s consent request: A false sense of choice?

According to noyb’s complaint, BeReal introduced a new consent banner feature for European users in July 2024. The contention is that this banner requested user consent for use of their data for targeted advertising, which is not unusual or problematic in itself. However, the question is whether the banner provides users with real consent choice or not.

Based on the description from the complaint, BeReal designed their banner to be displayed to users when they open the app. If a user accepts the terms — giving consent for data use for targeted advertising — then they never see the banner again. However, if a user declines consent, the banner allegedly reappears every day when users attempt to post on the app. As the app requires users to snap photos multiple times a day, seeing a banner display every time one tries to do so could be understandably frustrating.

In addition to resulting in an annoying user experience, this alleged action is also potentially a GDPR violation. It’s questionable if user consent under these described conditions is actually freely given.

The GDPR does require organizations to ask users for consent again if, for example, their data processing operations change, such as when they want to collect new data or use data for a new purpose.

It’s also recommended that organizations refresh user consent data from time to time, even though the GDPR doesn’t specify an exact time frame, as some other laws and guidelines do. For example, a company could ask users for consent for specific data uses every 12 months, either to ensure consent is still current, or to see if users who previously declined have changed their minds.
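To make the contrast with daily re-prompting concrete, the refresh approach described above can be sketched in a few lines of Python. This is only an illustration: the function name, the `purposes_changed` flag, and the 365-day interval are assumptions made for the example (the GDPR sets no fixed interval), not a legal standard or any vendor’s API.

```python
from datetime import date, timedelta
from typing import Optional

# Assumed refresh interval for this sketch; the GDPR does not specify one.
REFRESH_INTERVAL = timedelta(days=365)


def should_reprompt(last_decision_on: Optional[date],
                    today: date,
                    purposes_changed: bool) -> bool:
    """Decide whether to show the consent banner again.

    The same rule applies whether the user previously accepted or declined,
    so a "no" is respected for as long as a "yes" would be.
    """
    if last_decision_on is None:
        return True   # the user has never been asked
    if purposes_changed:
        return True   # new data or new purposes call for a fresh request
    return today - last_decision_on >= REFRESH_INTERVAL
```

Under these assumptions, a user who declined yesterday would not see the banner again today unless the processing purposes had changed.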

The noyb complaint against BeReal

In December 2024, privacy advocacy group noyb (the European Center for Digital Rights) filed a complaint against BeReal with French data protection authority Commission Nationale de l’Informatique et des Libertés (CNIL), arguing that the company’s alleged repeated banner displays for non-consenting users are a form of “nudging” or use of dark patterns.

The CNIL is one of the EU data protection authorities that has previously announced increased enforcement of data privacy for mobile apps, and released guidelines for better privacy protection for mobile apps in September 2024.

While regulators have increasingly taken a dim view of various design manipulations to obtain users’ consent, like hiding the “reject” option, noyb argues BeReal’s actions are a new dark pattern trend: “annoying people into consent”. Simply put, they contend that BeReal does not take no for an answer, meaning consent obtained through this repeated tactic is not freely given, and thus is a clear violation of the GDPR’s requirements.

The noyb legal team has requested that the CNIL order BeReal to delete the personal data of affected users, modify its consent practices to be GDPR-compliant, and impose an administrative fine as a deterrent to other companies that may consider similar tactics.

European regulators take a dim view of manipulations to obtain user consent

Whether it’s making users go hunting to find the “reject” button (or removing it entirely), or wearing them down with constant banner displays until they give in and consent to the requested data use, the European Data Protection Board (EDPB) has seen and addressed similar issues before.

It’s generally understood that users are likely to give in over time out of fatigue or frustration and consent to the requested data use. Companies get what they want, but not in a way that is voluntary or a good user experience. The EDPB has emphasized that in addition to being specific, informed, and unambiguous, consent must be freely given. Persistent prompts can be a form of coercion, and thus consent received that way may not be legally valid (Art. 4 GDPR).

As technologies change over time, the ways in which dark patterns can be deployed to manipulate users into giving consent are likely to further evolve and become more sophisticated.

A fine balance: Data monetization and privacy compliance

It is a common challenge for companies to try to find ways to increase consent rates for access to user data to drive monetization strategies via their websites, apps, and other connected platforms. Cases like the one against BeReal could potentially set the tone for regulators’ increasingly stringent expectations for online platforms’ data operations, and the company could serve as a cautionary tale for others considering questionable tactics where user privacy is concerned.

As more individuals around the world are protected by more data privacy laws, what data companies are allowed to access and under what circumstances is becoming more strictly controlled. This creates a growing challenge for companies that need data for advertising, analytics, personalization, and additional uses to grow their businesses.

Fortunately, there is a way to strike a balance between data privacy and data-driven business. It combines clear, user-friendly consent management, a shift to reliance on zero- and first-party data, and Privacy-Led Marketing that employs preference management and other strategies to foster engagement and long-term customer satisfaction and loyalty.

How Usercentrics helps

Good consent practices require making user experience better, not more frustrating. Usercentrics App CMP helps your company deliver, building trust with users and providing a smooth, friendly user experience for consent management. You can obtain higher consent rates while achieving and maintaining privacy compliance.

Simple, straightforward setup for technical and non-technical teams automates integration of your vendors, SSPs, and SDKs with the App Scanner. We provide over 2,200 pre-built legal templates so you can provide clear, comprehensive consent choices to your users.

With extensive customization, you can make sure your banners fit your app or game’s design and branding and provide key information, enabling valid user consent without getting in their way or causing frustration. And you also get our expert guidance and detailed documentation every step of the way.

Oregon was the twelfth state in the United States to pass comprehensive data privacy legislation with SB 619. Governor Tina Kotek signed the bill into law on July 18, 2023, and the Oregon Consumer Privacy Act (OCPA) came into effect for most organizations on July 1, 2024. Nonprofits have an extra year to prepare, so their compliance is required as of July 1, 2025.

In this article, we’ll look at the Oregon Consumer Privacy Act’s requirements, who they apply to, and what businesses can do to achieve compliance.

What is the Oregon Consumer Privacy Act (OCPA)?

The Oregon Consumer Privacy Act protects the privacy and personal data of over 4.2 million Oregon residents. The law establishes rules for any individual or entity conducting business in Oregon or those providing goods and services to its residents and processing their personal data. Affected residents are known as “consumers” under the law.

The OCPA protects Oregon residents’ personal data when they act as individuals or in household contexts. It does not cover personal data collected in a work context. This means information about individuals acting in their professional roles, rather than as consumers, is not covered under this law.

Consistent with the other US state-level data privacy laws, the OCPA requires businesses to inform residents about how their personal data is collected and used. This notification — usually included in a website’s privacy policy — must cover key details such as:

- What data is collected

- How the data is used

- Whether the data is shared and with whom

- Information about consumers’ rights

The Oregon privacy law uses an opt-out consent model, which means that in most cases, organizations can collect consumers’ personal data without prior consent. However, they must make it possible for consumers to opt out of the sale of their personal data and its use in targeted advertising or profiling. The law also requires businesses to implement reasonable security measures to protect the personal data they handle.

Who must comply with the Oregon Consumer Privacy Act (OCPA)?

Similar to many other US state-level data privacy laws, the OCPA sets thresholds for determining which organizations must comply with its requirements. However, unlike some other laws, it does not contain a revenue-only threshold.

To fall under the OCPA’s scope, during a calendar year an organization must control or process the personal data of:

- 100,000 consumers, not including consumers only completing payment transactions

or

- 25,000 consumers if 25 percent or more of the organization’s annual gross revenue comes from selling personal data
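As a rough illustration, the two thresholds above can be expressed as a simple check. The class and function names are hypothetical, and the sketch assumes the consumer counts are minimums ("at least") and that payment-only consumers have already been excluded from the count. It does not account for the exemptions discussed below and is not a substitute for legal review.

```python
from dataclasses import dataclass


@dataclass
class OrgProfile:
    # Consumers whose personal data is controlled or processed in a calendar
    # year, excluding those who only completed payment transactions.
    consumers_processed: int
    # Share of annual gross revenue from selling personal data (0.0 to 1.0).
    revenue_share_from_data_sales: float


def ocpa_thresholds_met(org: OrgProfile) -> bool:
    """Return True if either OCPA applicability threshold is met."""
    # Threshold 1: personal data of 100,000 consumers.
    if org.consumers_processed >= 100_000:
        return True
    # Threshold 2: 25,000 consumers and 25 percent or more of revenue
    # from selling personal data.
    return (org.consumers_processed >= 25_000
            and org.revenue_share_from_data_sales >= 0.25)
```

For example, an organization processing the data of 30,000 consumers with 10 percent of revenue from data sales would fall below both thresholds in this sketch.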

Exemptions to OCPA compliance

The OCPA is different from some other data privacy laws because many of its exemptions focus on the types of data being processed and what processing activities are being conducted, rather than just on the organizations themselves.

For example, instead of exempting healthcare entities under the Health Insurance Portability and Accountability Act (HIPAA), the OCPA exempts protected health information handled in compliance with HIPAA. This means protected health information is outside of the OCPA’s scope, but other data that a healthcare organization handles could still fall under the law. Organizations that may be exempt from compliance with other state-level consumer privacy laws should consult a qualified legal professional to determine if they are required to comply with the OCPA.

Exempted organizations and their services or activities include:

- Governmental agencies

- Consumer reporting agencies

- Financial institutions regulated by the Bank Act and their affiliates or subsidiaries, provided they focus exclusively on financial activities

- Insurance companies

- Nonprofit organizations established to detect and prevent insurance fraud

- Press, wire, or other information services (and the non-commercial activities of media entities)

Personal data collected, processed, sold, or disclosed under the following federal laws is also exempt from the OCPA’s scope:

- Health Insurance Portability and Accountability Act (HIPAA)

- Gramm-Leach-Bliley Act (GLBA)

- Health Care Quality Improvement Act

- Fair Credit Reporting Act (FCRA)

- Driver’s Privacy Protection Act

- Family Educational Rights and Privacy Act (FERPA)

- Airline Deregulation Act

Definitions in the Oregon Consumer Privacy Act (OCPA)

This Oregon data privacy law defines several key terms related to the data it protects and relevant data processing activities.

What is personal data under the OCPA?

The Oregon privacy law protects consumers’ personal data, which it defines as “data, derived data or any unique identifier that is linked to or is reasonably linkable to a consumer or to a device that identifies, is linked to or is reasonably linkable to one or more consumers in a household.”

The law specifically excludes personal data that is:

- deidentified

- made legally available through government records or widely distributed media

- made public by the consumer

The law does not specifically list what constitutes personal data. Common types of personal data that businesses collect include a consumer’s name, phone number, email address, Social Security Number, or driver’s license number.

It should be noted that personal data (also called personal information under some state privacy laws) and personally identifiable information are not always the same thing, and distinctions between the two are often made in data privacy laws.

What is sensitive data under the OCPA?

Sensitive data is personal data that requires special handling because it could cause harm or embarrassment if misused or unlawfully accessed. It refers to personal data that would reveal an individual’s:

- Racial or ethnic background

- National origin

- Religious beliefs

- Mental or physical condition or diagnosis

- Genetic or biometric data

- Sexual orientation

- Status as transgender or non-binary

- Status as a victim of crime

- Citizenship or immigration status

- Precise present or past geolocation (within 1,750 feet or 533.4 meters)

All personal data belonging to children is also considered sensitive data under the OCPA.

Oregon’s law is the first of the US privacy laws to include either transgender or non-binary gender expression or the status as a victim of crime as sensitive data. The definition of biometric data excludes facial geometry or mapping unless it is done for the purpose of identifying an individual.

The law’s definition of sensitive data carves out “the content of communications or any data generated by or connected to advanced utility metering infrastructure systems or equipment for use by a utility.” In other words, the law does not consider sensitive information to include communications content, like that in emails or messages, or data generated by smart utility meters and related systems used by utilities.

What is consent under the OCPA?

Like many other data privacy laws, the Oregon data privacy law follows the European Union’s General Data Protection Regulation (GDPR) regarding the definition of valid consent. Under the OCPA, consent is “an affirmative act by means of which a consumer clearly and conspicuously communicates the consumer’s freely given, specific, informed and unambiguous assent to another person’s act or practice…”

The definition also includes conditions for valid consent:

- the consumer’s inaction does not constitute consent

- the user interface used to request consent must not attempt to obscure, subvert, or impair the consumer’s choice

These conditions are highly relevant to online consumers and reflect that the use of manipulative dark patterns is increasingly frowned upon by data protection authorities, and increasingly prohibited. The Oregon Department of Justice (DOJ) website also clarifies that the use of dark patterns may be considered a deceptive business practice under Oregon’s Unlawful Trade Practices Act.

What is processing under the OCPA?

Processing under the OCPA means any action or set of actions performed on personal data, whether manually or automatically. This includes activities like collecting, using, storing, disclosing, analyzing, deleting, or modifying the data.

Who is a controller under the OCPA?

The OCPA uses the term “controller” to describe businesses or entities that decide how and why personal data is processed. While the law uses the word “person,” it applies broadly to both individuals and organizations.

The OCPA definition of controller is “a person that, alone or jointly with another person, determines the purposes and means for processing personal data.” In simpler terms, a controller is anyone who makes the key decisions about why personal data is collected and how it will be used.

Who is a processor under the OCPA?

The OCPA defines a processor as “a person that processes personal data on behalf of a controller.” Like the controller, while the law references a person, it typically refers to businesses or organizations that handle data for a controller. Processors are often third parties that follow the controller’s instructions for handling personal data. These third parties can include advertising partners, payment processors, or fulfillment companies, for example. Their role is to carry out specific tasks without deciding how or why the data is processed.

What is profiling under the OCPA?

Profiling is increasingly becoming a standard inclusion in data privacy laws, particularly as it can relate to “automated decision-making” or the use of AI technologies. The Oregon privacy law defines profiling as “an automated processing of personal data for the purpose of evaluating, analyzing or predicting an identified or identifiable consumer’s economic circumstances, health, personal preferences, interests, reliability, behavior, location or movements.”

What is targeted advertising under the OCPA?

Targeted advertising may involve emerging technologies like AI tools. It is also becoming a standard inclusion in data privacy laws. The OCPA defines targeted advertising as advertising that is “selected for display to a consumer on the basis of personal data obtained from the consumer’s activities over time and across one or more unaffiliated websites or online applications and is used to predict the consumer’s preferences or interests.” In simpler terms, targeted advertising refers to ads shown to a consumer based on their interests, which are determined by personal data that is collected over time from different websites and apps.

However, some types of ads are excluded from this definition, such as those that are:

- Based on activities within a controller’s own websites or online apps

- Based on the context of a consumer’s current search query, visit to a specific website, or app use

- Shown in response to a consumer’s request for information or feedback

The definition also excludes processing of personal data solely to measure or report an ad’s frequency, performance, or reach.

What is a sale under the OCPA?

The OCPA defines sale as “the exchange of personal data for monetary or other valuable consideration by the controller with a third party.” This means a sale doesn’t have to involve money. Any exchange of data for something of value, even if it’s non-monetary, qualifies as a sale under the law.

The Oregon privacy law does not consider the following disclosures of personal data to be a “sale”:

- Disclosures to a processor

- Disclosures to an affiliate or a third party to help the controller provide a product or service requested by the consumer

- Disclosures or transfers of personal data as part of a merger, acquisition, bankruptcy, or similar transaction in which a third party takes control of the controller’s assets, including personal data

- Disclosures of personal data that occur because the consumer:

  - directs the controller to disclose the data

  - intentionally discloses the data while directing the controller to interact with a third party

  - intentionally discloses the data to the public, such as through mass media, without restricting the audience

Consumers’ rights under the Oregon Consumer Privacy Act (OCPA)

The Oregon privacy law grants consumers a range of rights over their personal data, comparable to other US state-level privacy laws.

- Right to access: consumers can request confirmation of whether their personal data is being processed and the categories of personal data being processed, gain access to the data, and receive a list of the specific third parties it has been shared with (other than natural persons), all subject to some exceptions.

- Right to correction: consumers can ask controllers to correct inaccurate or outdated information they have provided.

- Right to deletion: consumers can request the deletion of their personal data held by a controller, with some exceptions.

- Right to portability: consumers can obtain a copy of the personal data they have provided to a controller, in a readily usable format, with some exceptions.

- Right to opt out: consumers can opt out of the sale of their personal data, targeted advertising, or profiling used for decisions with legal or similarly significant effects.

Consumers can designate an authorized agent to opt out of personal data processing on their behalf. The OCPA also introduces a requirement for controllers to recognize universal opt-out signals, further simplifying the opt-out process.

This Oregon data privacy law stands out by giving consumers the right to request a specific list of third parties that have received their personal data. Unlike many other privacy laws, this one requires controllers to maintain detailed records of the exact entities they share data with, rather than just general categories of recipients.

Children’s personal data has special protections under the OCPA. Parents or legal guardians can exercise rights for children under the age of 13, whose data is classified as sensitive personal data and subject to stricter rules. For minors between 13 and 15, opt-in consent is required for specific processing activities, including the use of their data for targeted advertising or profiling. “Opt-in” means that explicit consent is required before the data can be used for these purposes.

Consumers can make one free rights request every 12 months, to which an organization has 45 days to respond. They can extend that period by another 45 days if reasonably necessary. Organizations can deny consumer requests for a number of reasons. These include cases in which the consumer’s identity cannot reasonably be verified, or if the consumer has made too many requests within a 12-month period.
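The response window can be tracked with basic date arithmetic. The following is a minimal sketch, assuming the 45 days are calendar days counted from the date the request is received. The function name and the `extended` flag are hypothetical, and how days are counted in practice should be confirmed with legal counsel.

```python
from datetime import date, timedelta

RESPONSE_DAYS = 45    # initial response period described above
EXTENSION_DAYS = 45   # one extension, where reasonably necessary


def response_deadline(received_on: date, extended: bool = False) -> date:
    """Return the last day to respond to a consumer rights request."""
    days = RESPONSE_DAYS + (EXTENSION_DAYS if extended else 0)
    return received_on + timedelta(days=days)
```

Under these assumptions, a request received on January 1, 2025 would be due by February 15, 2025, or by April 1, 2025 if the extension is used.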

Oregon’s privacy law does not include private right of action, so consumers cannot sue data controllers for violations. California remains the only state that allows this provision.

What are the privacy requirements under the Oregon Consumer Privacy Act (OCPA)?

Controllers must meet the following OCPA requirements to protect the personal data they collect from consumers.

Privacy notice and transparency under the OCPA

The Oregon privacy law requires controllers to be transparent about their data handling practices. Controllers must provide a clear, easily accessible, and meaningful privacy notice for consumers whose personal data they may process. The privacy notice, also known as the privacy policy, must include the following:

- Purpose(s) for processing personal data

- Categories of personal data processed, including the categories of sensitive data

- Categories of personal data shared with third parties, including categories of sensitive data

- Categories of third parties with which the controller shares personal data and how each third party may use the data

- How consumers can exercise their rights, including:

  - How to opt out of processing for targeted advertising or profiling

  - How to submit a consumer rights request

  - How to appeal a controller’s denial of a rights-related request

- The identity of the controller, including any business name the controller uses or has registered in Oregon

- At least one actively monitored online contact method, such as an email address, for consumers to directly contact the organization

- A “clear and conspicuous description” for any processing of personal data for the purpose of targeted advertising or profiling “in furtherance of decisions that produce legal effects or effects of similar significance”

According to the Oregon DOJ website, the third-party categories requirement must strike a particular balance. It should offer consumers meaningful insights into the relevant types of businesses or processing activities, without making the privacy notice overly complex. Acceptable examples include “analytics companies,” “third-party advertisers,” and “payment processors,” among others.

The privacy notice or policy must be easy for consumers to access. It is typically linked in the website footer for visibility and accessibility from any page.

Data minimization and purpose limitation under the OCPA

The OCPA requires controllers to limit the personal data they collect to only what is “adequate, relevant, and reasonably necessary” for the purposes stated in the privacy notice. If the purposes for processing change, controllers must notify consumers and, where applicable, obtain their consent.

Data security under the OCPA

The Oregon data privacy law requires controllers to establish, implement, and maintain reasonable safeguards for protecting “the confidentiality, integrity and accessibility” of the personal data under their control. The data security measures also apply to deidentified data.

Oregon’s existing laws about privacy practices remain in effect as well. These laws include requirements for reasonable administrative, technical, and physical safeguards for data storage and handling, IoT device security features, and truth in privacy and consumer protection notices.

Data protection assessments (DPA) under the OCPA

Controllers must perform data protection assessments (DPA), also known as data protection impact assessments, for processing activities that present “a heightened risk of harm to a consumer.” These activities include:

- Processing for the purposes of targeted advertising

- Processing sensitive data

- The sale of personal data

- Processing for the purposes of profiling if there is a reasonably foreseeable risk to the consumer of:

  - Unfair or deceptive treatment

  - Financial, physical, or reputational injury

  - Intrusion into a consumer’s private affairs

  - Other substantial injury

The Attorney General may also require a data controller to conduct a DPA or share the results of one in the course of an investigation.

Consent requirements under the OCPA

The OCPA primarily uses an opt-out consent model. This means that in most cases controllers are not required to obtain consent from consumers before collecting or processing their personal data. However, there are specific cases where consent is required:

- Processing sensitive data requires explicit consent from consumers.

- For children’s data, the OCPA follows the federal Children’s Online Privacy Protection Act (COPPA) and requires consent from a parent or legal guardian before processing the personal data of any child under 13.

- Controllers must obtain explicit consent to use the personal data of minors between the ages of 13 and 15 for targeted ads, profiling, or sale.

- Controllers must obtain consent to use personal data for purposes other than those originally disclosed in the privacy notice.
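The cases above can be read as a small decision rule. The sketch below is one possible way to encode them. The enum, the purpose labels, and the function name are hypothetical, and an `age` of `None` is assumed to mean an adult or a consumer whose age is unknown. It simplifies the statute and is meant only to show the order in which the checks might be applied.

```python
from enum import Enum
from typing import Optional


class ConsentRule(Enum):
    PARENTAL_CONSENT = "consent from a parent or legal guardian"
    OPT_IN = "explicit opt-in consent"
    OPT_OUT = "no prior consent; opt-out must be available"


# Purposes that need opt-in consent for minors aged 13 to 15 (labels assumed).
RESTRICTED_FOR_MINORS = {"targeted_advertising", "profiling", "sale"}


def required_consent(age: Optional[int],
                     sensitive: bool,
                     purpose: str,
                     disclosed_in_notice: bool = True) -> ConsentRule:
    """Return the consent rule that applies to one processing activity."""
    if age is not None and age < 13:
        return ConsentRule.PARENTAL_CONSENT
    if age is not None and 13 <= age <= 15 and purpose in RESTRICTED_FOR_MINORS:
        return ConsentRule.OPT_IN
    if sensitive or not disclosed_in_notice:
        return ConsentRule.OPT_IN
    return ConsentRule.OPT_OUT
```

Checking the child case first reflects that all personal data belonging to children under 13 is treated as sensitive, regardless of the purpose.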

To help consumers to make informed decisions about their consent, controllers must clearly disclose details about the personal data being collected, the purposes for which it is processed, who it is shared with, and how consumers can exercise their rights. Controllers must also provide clear, accessible information on how consumers can opt out of data processing.

Consumers must be able to revoke consent at any time, as easily as they gave it. Data processing must stop after consent has been revoked, and no later than 15 days after receiving the revocation.
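A consent record can carry the revocation date so that the 15-day limit is easy to monitor. This is a minimal sketch with hypothetical class and method names, assuming calendar days counted from the date the revocation is received.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

REVOCATION_LIMIT_DAYS = 15  # processing must stop no later than this


@dataclass
class ConsentRecord:
    granted_on: date
    revoked_on: Optional[date] = None

    def stop_processing_by(self) -> Optional[date]:
        """Latest date by which processing must have stopped, if revoked."""
        if self.revoked_on is None:
            return None
        return self.revoked_on + timedelta(days=REVOCATION_LIMIT_DAYS)

    def processing_overdue(self, today: date) -> bool:
        """True if consent was revoked and the 15-day limit has passed."""
        deadline = self.stop_processing_by()
        return deadline is not None and today > deadline
```

In practice, processing would ideally stop as soon as the revocation is recorded; the deadline here only marks the outer limit.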

Nondiscrimination under the OCPA

The OCPA prohibits controllers from discriminating against consumers who exercise their rights under the law. This includes actions such as:

- Denying goods or services

- Charging different prices or rates than those available to other consumers

- Providing a different level of quality or selection of goods or services to the consumer

For example, if a consumer opts out of data processing on a website, that individual cannot be blocked from accessing that website or its functions.

Some website features and functions do not work without certain cookies or trackers being activated, so if a consumer does not opt in to their use because they collect personal data, the site may not work as intended. This is not considered discriminatory.

This Oregon privacy law permits website operators and other controllers to offer voluntary incentives for consumers’ participation in activities where personal data is collected. These may include newsletter signups, surveys, and loyalty programs. Offers must be proportionate and reasonable to the request as well as the type and amount of data collected. This way, they will not look like bribes or payments for consent, which data protection authorities frown upon.

Third party contracts under the OCPA

Before starting any data processing activities, controllers must enter into legally binding contracts with third-party processors. These contracts govern how processors handle personal data on behalf of the controller, and must include the following provisions:

- The processor must ensure that all individuals handling personal data are bound by a duty of confidentiality

- The contract must provide clear instructions for data processing, detailing:

  - The nature and purpose of processing

  - The types of data being processed

  - The duration of the processing

  - The rights and obligations of both the controller and the processor

- The processor must delete or return the personal data at the controller’s direction or after the services have ended, unless legal obligations require the data to be retained

- Upon request, the processor must provide the controller with all necessary information to verify compliance with contractual obligations

- If the processor hires subcontractors, they must have contracts in place requiring the subcontractors to meet the processors’ obligations

- The contract must allow the controller or their designee to conduct assessments of the processor’s policies and technical measures to ensure compliance

These contracts are known as data processing agreements under some data protection regulations like the GDPR.

Universal opt-out mechanism under the OCPA

As of January 1, 2026, organizations subject to the OCPA must comply with a universal opt-out mechanism. Also called a global opt-out signal, it includes tools like the Global Privacy Control.

This mechanism enables a consumer to set their data processing preferences once and have those preferences automatically communicated to any website or platform that detects the signal. Preferences are typically set via a web browser plugin.

While this requirement is not yet standard across all US or global data privacy laws, it is becoming more common in newer legislation. Other states that require controllers to recognize global opt-out signals include California, Minnesota, Nebraska, Texas, and Delaware.
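On the server side, the Global Privacy Control proposal communicates the signal through a `Sec-GPC: 1` HTTP request header. The following sketch shows one way a site might detect it. The function name is hypothetical, the headers are assumed to arrive as a plain name-to-value mapping, and what the site does after detecting the signal (for example, recording an opt-out of sale and targeted advertising) is left to the organization and its legal counsel.

```python
from typing import Mapping


def gpc_opt_out_requested(headers: Mapping[str, str]) -> bool:
    """Return True if the request carries a Global Privacy Control signal."""
    for name, value in headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == "sec-gpc":
            return value.strip() == "1"
    return False
```

For example, a request with the header `Sec-GPC: 1` would be treated as an opt-out request, while a request without the header would not.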

How to comply with the Oregon Consumer Privacy Act (OCPA)

Below is a non-exhaustive checklist to help your business and website address key OCPA requirements. For advice specific to your organization, consulting a qualified legal professional is strongly recommended.

- Provide a clear and accessible privacy notice detailing data processing purposes, shared data categories, third-party recipients, and consumer rights.

- Maintain a specific list of third parties with whom you share consumers’ personal data.

- Limit data collection to what is necessary for the specified purposes, and notify consumers if those purposes change.

- Obtain consent from consumers if you plan to process their data for purposes other than those that have been communicated to them.

- Implement reasonable safeguards to protect the confidentiality, integrity, and accessibility of personal and deidentified data.

- Conduct data protection assessments for processing activities with heightened risks, such as targeted advertising, activities involving sensitive data, or profiling.

- Implement a mechanism for consumers to exercise their rights, and communicate this mechanism to consumers.

- Obtain explicit consent for processing sensitive data, children’s data, or for purposes not initially disclosed.

- Provide consumers with a user-friendly method to revoke consent.

- Once consumers withdraw consent, stop all data processing related to that consent within the required 15-day period.

- Provide a simple and clear method for consumers to opt out of data processing activities.

- Avoid discriminatory practices against consumers exercising their rights, while offering reasonable incentives for data-related activities.

- Include confidentiality, compliance obligations, and terms for data return or deletion in binding contracts with processors.

- Comply with global opt-out signals like the Global Privacy Control by January 1, 2026.

Enforcement of the Oregon Consumer Privacy Act (OCPA)

The Oregon Attorney General’s office is the enforcement authority for the OCPA. Consumers can file complaints with the Attorney General regarding data processing practices or the handling of their requests. The Attorney General’s office must notify an organization of any complaint and of any investigation that is launched. During investigations, the Attorney General can request controllers to submit data protection assessments and other relevant information. Enforcement actions must be initiated within five years of the last violation.

Controllers have the right to have an attorney present during investigative interviews and can refuse to answer questions. The Attorney General cannot bring in external experts for interviews or share investigation documents with non-employees.

Until January 1, 2026, controllers have a 30-day cure period during which they can fix OCPA violations. If the issue is not resolved within this time, the Attorney General may pursue civil penalties. The right to cure sunsets January 1, 2026, after which the opportunity to cure will only be at the discretion of the Attorney General.

Fines and penalties for noncompliance under the OCPA

The Attorney General can seek civil penalties of up to USD 7,500 per violation. Additional actions may include seeking court orders to stop unlawful practices, requiring restitution for affected consumers, or reclaiming profits obtained through violations.

If the Attorney General succeeds, the court may require the violating party to cover legal costs, including attorney’s fees, expert witness fees, and investigation expenses. However, if the court determines that the Attorney General pursued a claim without a reasonable basis, the defendants may be entitled to recover their attorney’s fees.

How does the Oregon Consumer Privacy Act (OCPA) affect businesses?

The OCPA introduces privacy law requirements that are similar to other state data protection laws. These include obligations around notifying consumers about data practices, granting them access to their data, limiting data use to specific purposes, and implementing reasonable security measures.

One notable distinction is that the law sets different compliance timelines based on an organization’s legal status. The effective date for commercial entities is July 1, 2024, while nonprofit organizations are given an additional year and must comply by July 1, 2025.

Since the compliance deadline for commercial entities has already passed, businesses that fall under the OCPA’s scope should ensure they meet its requirements as soon as possible to avoid penalties. Nonprofits, though they have more time, should actively prepare for compliance.

Businesses covered by federal laws like HIPAA and the GLBA, which may exempt them from other state data privacy laws, should confirm with a qualified legal professional whether they need to comply with the OCPA.

The Oregon Consumer Privacy Act (OCPA) and consent management

Oregon’s law is based on an opt-out consent model. In other words, consent does not need to be obtained before collecting or processing personal data unless it is sensitive or belongs to a child.

Controllers do need to inform consumers about what data is collected and used, for what purposes, with whom it is shared, and whether it is to be sold or used for targeted advertising or profiling.

Consumers must also be informed of their rights regarding data processing and how to exercise them. This includes the ability for consumers to opt out of processing of their data or change their previous consent preferences. Typically, this information is presented on a privacy page, which must be kept up to date.

As of January 1, 2026, organizations must also recognize and respect consumers’ consent preferences as expressed via a universal opt-out signal, such as the Global Privacy Control.
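One widely used universal opt-out signal is the Global Privacy Control (GPC), which participating browsers send as a `Sec-GPC: 1` HTTP request header. The sketch below shows one way a server might detect it. The function name is hypothetical, and how the signal is then applied (for example, suppressing sale or targeted advertising) depends on the organization's own systems.

```python
from typing import Mapping


def gpc_opt_out_requested(headers: Mapping[str, str]) -> bool:
    """Return True if the request carries the Global Privacy Control signal.

    HTTP header names are case-insensitive, so the lookup normalizes them.
    Only the value "1" expresses an opt-out preference.
    """
    for name, value in headers.items():
        if name.lower() == "sec-gpc":
            return value.strip() == "1"
    return False
```

For example, `gpc_opt_out_requested({"Sec-GPC": "1"})` evaluates to `True`, while a request without the header is treated as carrying no signal.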

Websites and apps can use a banner to inform consumers about data collection and enable them to opt out. This is typically done using a link or button. A consent management platform (CMP) like the Usercentrics CMP for website consent management or app consent management also helps to automate the detection of cookies and other tracking technologies that are in use on websites and apps.

A CMP can streamline sharing information about data categories and the specific services in use by the controller and/or processor(s), as well as third parties with whom data is shared.

The United States still only has a patchwork of state-level privacy laws rather than a single federal law. As a result, many companies doing business across the country, or foreign organizations doing business in the US, may need to comply with a variety of state-level data protection laws.

A CMP can make this easier by enabling banner customization and geotargeting. Websites can display data processing, consent information, and choices for specific regulations based on specific user location. Geotargeting can also improve clarity and user experience by presenting this information in the user’s preferred language.
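Conceptually, geotargeting comes down to mapping a visitor's region to the regulation and consent model that apply there. The following is a simplified sketch, not how any particular CMP implements it: the region codes, class name, and fallback choice are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BannerConfig:
    regulation: str
    consent_model: str  # "opt-in" or "opt-out"


# Hypothetical region-to-banner mapping; a real deployment would cover
# many more regions and be reviewed by legal counsel.
BANNERS = {
    "US-OR": BannerConfig("OCPA", "opt-out"),
    "US-CA": BannerConfig("CCPA/CPRA", "opt-out"),
    "EU": BannerConfig("GDPR", "opt-in"),
}

# Assumption: unknown regions fall back to the strictest (opt-in) banner.
DEFAULT_BANNER = BannerConfig("GDPR", "opt-in")


def banner_for(region_code: str) -> BannerConfig:
    """Pick the banner configuration for a visitor's region."""
    return BANNERS.get(region_code, DEFAULT_BANNER)
```

Falling back to the strictest configuration is a conservative design choice. Some organizations may prefer a different default for regions without a dedicated privacy law.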

Usercentrics does not provide legal advice, and information is provided for educational purposes only. We always recommend engaging qualified legal counsel or a privacy specialist regarding data privacy and protection issues and operations.

Microsoft Universal Event Tracking (UET) with Consent Mode helps businesses responsibly manage data while optimizing digital advertising efforts. UET is a tracking tool from Microsoft Advertising that collects user behavior data to help businesses measure conversions, optimize ad performance, and build remarketing strategies.

Consent Mode works alongside UET. It’s a feature that adjusts how data is collected based on user consent preferences. This functionality is increasingly important as businesses address global privacy regulations like the GDPR and CCPA.

For companies using Microsoft Ads, understanding and implementing these tools helps them prioritize user privacy, build trust, and achieve better marketing outcomes while respecting data privacy standards.

What is Microsoft UET Consent Mode?

Microsoft UET Consent Mode is a feature designed to help businesses respect user privacy while maintaining effective advertising strategies. It works alongside Microsoft Universal Event Tracking (UET) by dynamically adjusting how data is collected based on user consent.

When visitors interact with your website, Consent Mode determines whether tracking is activated or limited, depending on their preferences. For instance, if a user opts out of tracking, Consent Mode restricts data collection. This function aligns the tracking process with privacy preferences and applicable regulations.
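The decision logic described above can be modeled in a few lines. In production, the signal is set in the browser through the UET tag's JavaScript, so this Python sketch only illustrates the mapping from a user's choice to a consent signal. The `ad_storage` setting with `granted` and `denied` values, and the `default` and `update` actions, reflect our understanding of Microsoft's Consent Mode and should be verified against Microsoft's documentation. The function names are hypothetical.

```python
from typing import Optional


def ad_storage_value(user_consented: Optional[bool]) -> str:
    """Map a user's choice to a consent value.

    Assumption: no recorded choice (None) is treated as "denied",
    which suits opt-in regimes such as the GDPR.
    """
    return "granted" if user_consented is True else "denied"


def consent_command(user_consented: Optional[bool]) -> tuple:
    """Build the (command, action, settings) triple a tag would send.

    "default" is used before the user has chosen; "update" afterwards.
    """
    action = "default" if user_consented is None else "update"
    return ("consent", action, {"ad_storage": ad_storage_value(user_consented)})
```

A page would send the `default` command on load and an `update` command once the visitor interacts with the consent banner.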

Consent Mode supports businesses as they balance privacy expectations with effective campaign management. It also helps businesses align their data practices with Microsoft’s advertising policies and regional privacy laws to create a more transparent and user-focused approach to data management.

Why businesses need Microsoft UET Consent Mode

The role of UET in advertising

Microsoft Universal Event Tracking (UET) offers businesses the tools they need to optimize advertising strategies. With a simple tag integrated into a business’s website, UET helps advertisers monitor essential user actions like purchases, form submissions, and page views. This data is invaluable for building remarketing audiences, tracking conversions, and making data-backed decisions that improve ad performance.

However, effectively collecting and utilizing this data requires alignment with user consent preferences. Without proper consent, businesses risk operating outside privacy regulations, and could face penalties or restrictions. By integrating UET with Consent Mode, businesses can respect user choices while continuing to access the insights needed to run impactful advertising campaigns.

Challenges in advertising compliance

In today’s digital age, businesses must carefully balance data-driven advertising with growing privacy expectations. Regulations like the General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and the California Privacy Rights Act (CPRA) have set clear rules about how user data can be collected, stored, and used. Non-compliance can lead to significant consequences, such as hefty fines, restricted access to ad platforms, or even account suspension.

Beyond financial and operational risks, non-compliance can damage a company’s reputation. When businesses fail to address privacy concerns, they risk losing customer trust—a resource that is difficult to rebuild. As users become more aware of how their data is used, businesses that fail to adopt transparent practices may struggle to retain their audience.

Enforcement of Microsoft UET Consent Mode

Microsoft Advertising requires customers to obtain explicit consent and provide consent signals as of May 5, 2025.

Providing consent signals enables Microsoft Ads customers to comply with the requirements of privacy laws like the GDPR, where violations can result in hefty fines and other penalties.

Obtaining explicit consent also demonstrates respect for users’ privacy and rights, building user trust. Consumers increasingly indicate concerns over access to and use of their data online.

Consent also benefits advertising performance as part of your Privacy-Led Marketing strategy. With consent signals in place, you can continue generating valuable campaign insights for effective targeting and conversion tracking.

Benefits of using Microsoft UET Consent Mode

By integrating Microsoft UET Consent Mode, companies can address user expectations, improve data accuracy, and create a more transparent relationship with their audience. Let’s take a closer look at the benefits of using Microsoft UET Consent Mode.

Supporting privacy regulations

Privacy laws such as the GDPR, CCPA, and the ePrivacy Directive require businesses to handle user data responsibly. Microsoft UET Consent Mode adjusts data collection practices based on user preferences, helping companies better align with these requirements. By respecting user choices, businesses can reduce the risks associated with non-compliance.

Accurate data collection

Data accuracy is a key component of any successful advertising strategy. With Consent Mode, businesses only collect insights from users who agree to data tracking. This focus helps prevent skewed data caused by collecting information from users who have not consented. These insights are therefore more reliable and actionable.

Optimized ad campaigns

Consent Mode enables businesses to continue leveraging tools like remarketing and conversion tracking while honoring user privacy preferences. This functionality helps advertisers maintain the effectiveness of their campaigns by focusing on audiences who have opted into tracking. As a result, companies can make data-driven decisions without compromising privacy.

Building trust through transparency

Demonstrating respect for user privacy goes beyond privacy compliance — it also fosters trust. Transparency about how data is collected and used enables businesses to strengthen their relationships with customers. A privacy-first approach can set companies apart in a competitive advertising environment by showing users that their choices and rights are valued.

Why use Usercentrics Web CMP with Microsoft UET Consent Mode

Usercentrics Web CMP provides businesses with a practical solution for integrating Microsoft UET with Consent Mode. By leveraging Usercentrics Web CMP’s unique features, companies can manage user consent effectively while maintaining a seamless advertising strategy.

Streamlined implementation

Usercentrics Web CMP simplifies the process of integrating Microsoft Consent Mode. With automated configuration, businesses can set up their systems quickly and focus on optimizing their campaigns without the complexities of manual implementation.

Seamless compatibility

As one of the first consent management platforms to offer automated support for Microsoft Consent Mode, Usercentrics Web CMP is designed for smooth integration with Microsoft UET. This compatibility reduces technical challenges and supports reliable functionality.

Customizable consent banners

The CMP enables businesses to design consent banners that align with their branding, creating a consistent user experience. Clear, branded messaging helps communicate data collection practices effectively while maintaining professionalism.

Privacy-focused data management

Usercentrics Web CMP provides a centralized platform for managing user consent across different regions and regulations. Businesses can easily adapt to global privacy requirements and organize their data collection practices efficiently, all in one place.

How to set up Microsoft UET with Consent Mode using Usercentrics Web CMP

Usercentrics Web CMP simplifies the process of setting up Microsoft UET with Consent Mode. As the first platform to offer automated implementation of Microsoft Consent Mode, Usercentrics Web CMP enables companies to focus on their marketing efforts while managing user consent effectively.

To integrate Microsoft UET with Consent Mode using Usercentrics Web CMP, follow the detailed walkthrough in the support article.

Adapting to Privacy-Led Marketing with Microsoft UET Consent Mode

Microsoft UET with Consent Mode, supported by Usercentrics Web CMP, provides businesses with a practical approach to balancing effective advertising with user privacy. With this solution, companies can streamline consent management, enhance their advertising strategies, and adapt to ever-changing privacy expectations.

Respecting user choices isn’t just about privacy compliance—it’s an opportunity to build trust and demonstrate a commitment to transparency. Businesses that embrace Privacy-Led Marketing position themselves as trustworthy partners in a competitive digital marketplace.

Adopting Privacy-Led Marketing does more than support long-term customer relationships. It also enables companies to responsibly leverage valuable insights to optimize their campaigns. Microsoft UET with Consent Mode and Usercentrics Web CMP together create a strong foundation for businesses to effectively navigate the intersection of privacy and performance.

The Gramm-Leach-Bliley Act (GLBA), enacted in 1999, sets standards for protecting consumer data in the United States’ financial industry. Amid growing concerns about how institutions collect, use, and share sensitive personal information, the Act was passed as part of sweeping reforms to modernize the financial services sector.